The American anti-austerity tradition

The financialization of the U.S. economy has directed U.S. savings into more and more unproductive, speculative investments. Consumption has become increasingly propped up by unsustainable “indebted demand.” And the threat of secular stagnation driven by inequality looms ever larger against the backdrop of changing demographics and environmental degradation. To many, these are novel economic conditions that confound conventional wisdom and call long-held assumptions into question.

But in fact, the United States has been here before. In the early 20th century, these concepts were not just familiar to economists and policymakers; they formed the core of a pragmatic U.S. economic tradition stretching far back into the nation’s history. Put into practice by the New Deal nearly a century ago, they laid the groundwork for the economic growth of the post-World War II decades.

That may sound surprising. The victory of neoliberalism beginning in the 1980s has so thoroughly impoverished our historical imagination that it can be hard to picture the American past as anything other than a laissez-faire frontier society. But as we face mounting threats to our economy and society, learning to recognize this unfamiliar history can give policymakers the confidence they need to move forward on the public investments necessary to save the environment, fight inequality, and boost growth today.


Three early 20th-century perspectives on the U.S. economy

The economic convulsions that followed World War I and the Spanish flu pandemic led a number of U.S. intellectuals, economists, and policymakers to question the consequences of the growing economic inequality and low levels of investment that characterized the period. Many of them grasped the connections among economic inequality, economic growth, and robust aggregate demand.

Their ideas have a great deal to teach us now. Eavesdropping on their conversations can help us connect today’s problems with earlier episodes in the American past. At least three coherent perspectives on the U.S. economy developed during this period are worth revisiting. Each offers its own diagnosis and prescription; none involves embracing austerity or leaning on finance. Rather, all three center on public investments that benefit U.S. workers and their families equitably. They are:

- Underconsumption theory. When workers can’t afford to buy everything they produce, it creates imbalances in the economy that are papered over by unsustainable extensions of credit.

- Channel finance. When financial markets fail to support productive investment, they encourage bubbles, hurt workers, and slow growth.

- Secular stagnation. When the state fails to boost investment, mature capitalism suffers from a congenital insufficiency of aggregate demand. Mass unemployment creates social and political antagonisms that are a danger to democracy.

Over time, each of these perspectives coalesced into a set of policy prescriptions, some of which were implemented at the time and all of which offer important lessons for policymakers today. Let’s briefly examine each in turn.

Underconsumption theory

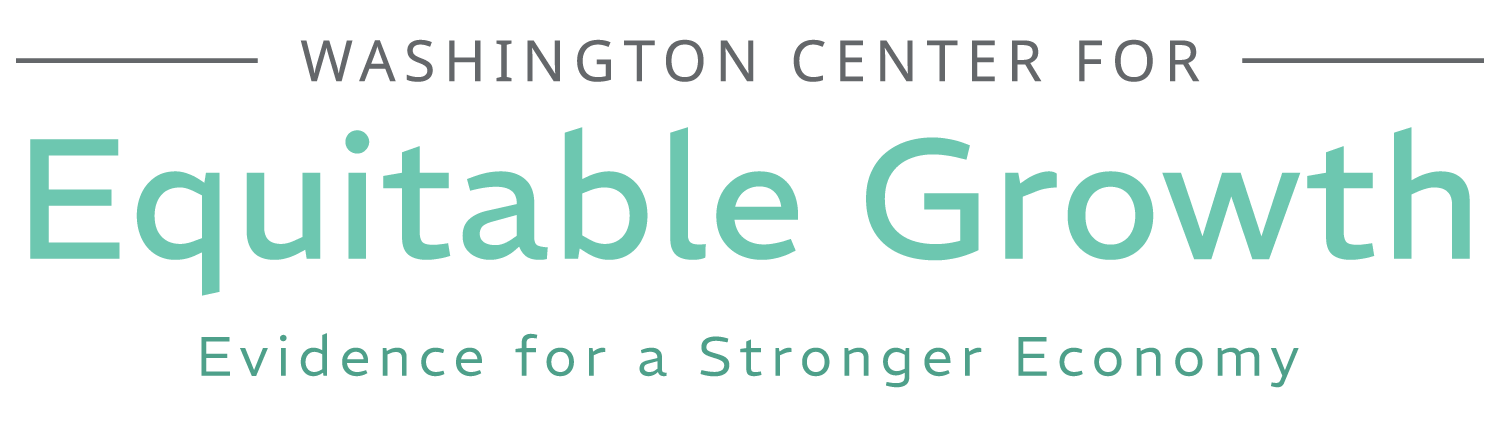

The core claim of underconsumption theory is straightforward: When workers can’t afford to buy everything they produce, imbalances build up in the economy, including global trade imbalances, and these are papered over by the extension of unsustainable debt and credit. Eventually, the credit bubble bursts and a financial crisis follows. (See Figure 1.)

Figure 1

This theory was developed and popularized by the Pollak Foundation for Economic Research, a think tank funded by Waddill Catchings, the senior partner and largest shareholder at the investment bank Goldman Sachs. Catchings’ co-author, William Trufant Foster, was an economist and educator best known for drawing up the blueprint for Reed College and serving as its first president. In their diagnosis, the maldistribution of income and finance produced the economic fragilities that caused the Great Depression. Their solutions were to raise wages, organize labor, and invest in the social safety net.

However radical that perspective may sound today, it did not prevent the Pollak Foundation from gaining traction among a wide swath of political, academic, and business leaders in the 1920s and 1930s. Catchings, Foster, and the Pollak Foundation inspired some of the key leaders of President Franklin Delano Roosevelt’s New Deal. By 1935, the Pollak Foundation counted Secretary of Agriculture Henry Wallace, Sen. Robert Wagner, and Secretary of War George Dern among its inner circle, as well as leading academics such as Irving Fisher, Ernst Lindley, and Paul Douglas.

Foster and Catchings also used their Goldman Sachs network to draw a surprisingly broad base of support from the business community. Among their financial backers were the U.S. Chamber of Commerce, the National Industrial Conference Board, and the influential McClure Newspaper Syndicate.

Many business leaders accepted the core tenets of underconsumption theory but balked at the idea of government investment and collective bargaining. The so-called Fordist wage, initially just an attempt to reduce employee turnover, eventually came to symbolize the effort to solve this problem privately. Henry Ford’s insight was that his company could succeed only if his workers earned enough to buy his cars. Without demand for goods and services from workers, no level of productivity could generate sustainable profits.

The limitation of Ford’s solution, of course, was that his workers mostly did not spend their high wages on Ford cars. They spent them on groceries, clothing, entertainment, and other aspects of the good life. Ford’s high wages generated positive externalities for the rest of the economy, benefits that his company did not capture. The private-market idea that firms should simply raise wages out of enlightened self-interest was hamstrung by a coordination problem: Only if all firms raised wages simultaneously would demand for Ford cars rise enough to justify Ford’s own wage hike, yet each firm had an incentive to shirk this collective obligation.

Facilitating this coordination was the job of unions and government. The Fair Labor Standards Act instituted higher wages through the first national minimum wage law and mandatory overtime pay. The National Labor Relations Act empowered organized labor to demand even higher wages. And the Reconstruction Finance Corporation used public funds to invest in key infrastructure to employ labor. These laws are often remembered as efforts to create a more egalitarian economy, but their supporters also saw them as instruments of macroeconomic stability and growth. As World War II price czar Chester Bowles argued in 1946, steadily increasing minimum wage laws are necessary “to prevent the backward businessmen from undermining the wage structure and from living off the purchasing power provided by the payrolls of businessmen who pay decent wages.”

As recently as the 1990s, the conservative economist Greg Mankiw estimated that something like 50 percent of Americans were living hand-to-mouth and consuming all of their income. That is a sure sign that the forces of underconsumption were at work. The situation is likely even worse today, after the Great Recession of 2007–2009, the ensuing decade of tepid wage gains, and then the onset of the coronavirus recession. Net productivity has grown by almost 70 percent since 1979, but hourly wages are up only 12 percent.

The result is a massive gap between what U.S. workers are producing and what they can afford to buy. As in the 1920s, the gap has been papered over by ever-growing consumer debt. At the most fundamental level, returning to sustainable prosperity in the years to come will require broad-based income gains for those who have lost out over the past 40 years.

The earlier generation of pragmatic economists and policymakers also understood that savings benefit the U.S. economy only if they are turned into productive investment. Otherwise, the result is some combination of unemployment, slower growth, and shifts in interest rates. When economic inequality is high, the savings of the rich rise while the savings of everyone else fall, and the wealthy lend to the less wealthy instead of financing fixed investment. This kind of credit sustains consumption in the short run at the expense of employment-generating investment.

Channel finance

In addition to the fundamental solution to this problem (reducing inequality), these thinkers also recommended channeling finance to break out of this vicious circle. One of them was Marriner Eccles, a prominent Utah banker who went on to chair the Federal Reserve. Inspired by Foster and Catchings, Eccles became involved in the 1933 Emergency Banking Act and wrote the 1934 National Housing Act and the 1935 Banking Act, measures that helped steady U.S. financial institutions, bolster the public’s trust in financial markets, and prepare the ground for more productive investment by U.S. financial institutions.

Eccles’ key insight was that savings were economically useful only if they were channeled into productive investment: building houses, paving roads, and upgrading factories. Left to their own devices, Eccles believed, financial institutions in an unequal society would tend to shy away from these productive investments because of the risks inherent in their long time horizons, favoring less risky consumer credit instead. It was up to the state to channel savings into productive investment through interventions in the financial sector.

The Federal Housing Administration did this by insuring banks against default on the mortgages they issued, which made those loans less risky and hence brought interest rates down. By standardizing mortgages, the FHA also helped create a national secondary market for them, a process that changed the way mortgages were issued. Instead of the then-usual 5-year balloon mortgage, under which borrowers bore the risk of having to refinance or pay off the loan at the end of the term, the FHA sponsored the now-standard 30-year mortgage and allowed for low down-payment loans. By subsidizing mortgage lending through its insurance program, the FHA channeled savings into the construction of new houses while also making homeownership more widely available.

Other financial regulations were instituted by the Fed under Eccles’ personal direction. Regulation Q capped the interest rate that financial institutions could pay on deposits. Because risk and return are correlated, riskier institutions could otherwise outbid their more prudent competitors for deposits by offering higher rates; the cap gave the less risky institutions a chance to compete. In later years, this was followed by closer supervision and limits on banks’ transactions with their affiliates, including higher collateral requirements, which made banks safer.

Two new regulatory institutions were also created to further buoy public confidence in U.S. financial markets: the Federal Deposit Insurance Corporation and the Securities and Exchange Commission. Finally, the Glass-Steagall Act barred U.S. financial institutions from combining commercial and investment banking, preventing banks from investing in speculative securities or pushing those securities on their clients.

The result was a long period of financial market stability in which productive, long-term investments were attractive uses of capital. Different types of financial institutions, such as thrifts, savings and loan companies, investment banks, and commercial banks, channeled the economy’s savings into specific kinds of investment; savings and loans, for example, specialized in home mortgages.

Importantly, Eccles and his allies were bankers themselves, and they did not want to suppress finance or make banking unprofitable. Rather, they sought to treat the flow of funds like a river, a public resource to be managed, contained, locked, dammed, diked, and stabilized for the common good. From their perspective, the point was to direct the river, not to dry it up.

Secular stagnation

“Secular stagnation” is the term Harvard University economist Alvin Hansen used to describe an economic condition in which there are not enough profitable investment opportunities to absorb all the savings in the economy. During the Great Depression, Hansen worried that this would become the natural state of the U.S. economy: the closing of the Western frontier, declining population growth rates, and a slower pace of technological progress meant there would simply be fewer outlets for capital investment than there had been in the 19th century.

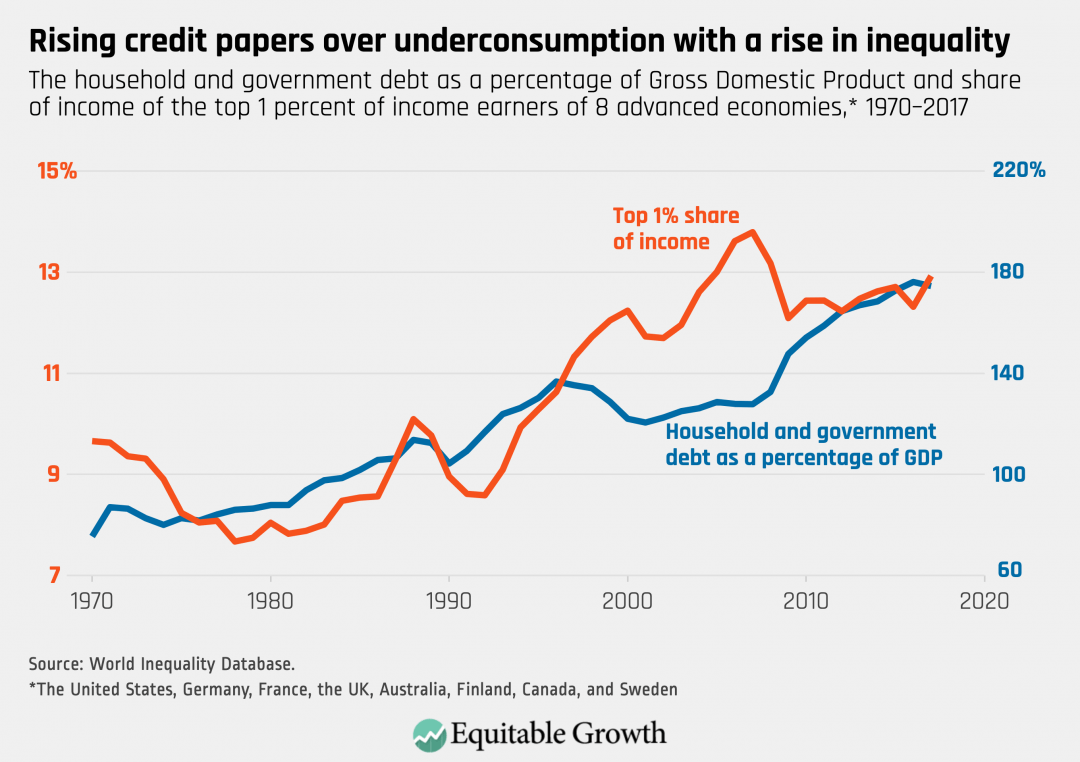

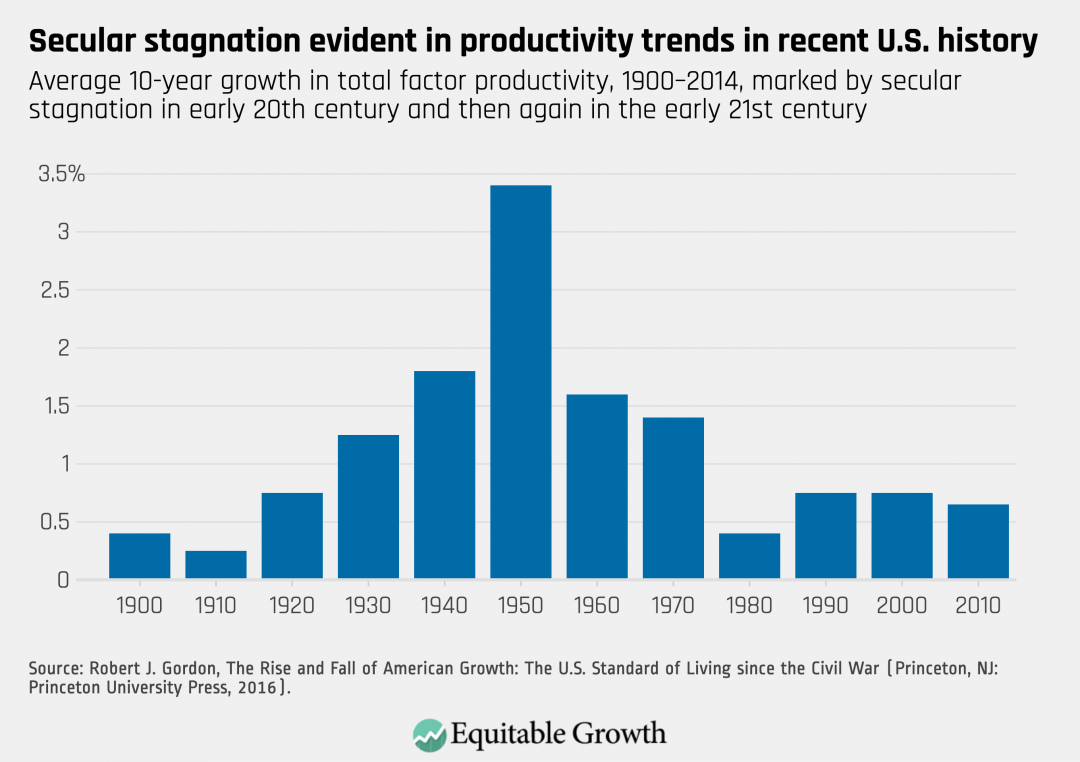

History, it turns out, delivered different results. Globalization, the baby boom, the long second industrial revolution, and the 1990s tech boom held off for several more generations the forces that were expected to slow capital investment. But today, we again face falling population growth rates and slowing technological progress. Reality, in other words, has finally caught up with secular stagnation, an idea ahead of its time. (See Figures 2 and 3.)

Figure 2

Figure 3

Through conversations with his students, Hansen came to realize that the government could stabilize a mature economy with public investment. In the late 1930s, he became an economic evangelist for deficit spending, training a whole cadre of students in what was then the new and exciting Keynesian theory, before he became active in government during World War II.

Among Hansen’s students were Paul Samuelson, the textbook author and Nobel laureate, and Paul Sweezy, the famous editor of the magazine Monthly Review. Their diagnosis of secular stagnation led them to advocate that the federal government soak up the excess savings in the U.S. economy and use them to create public goods, from medical care to public housing, from better schools to national parks and beautiful art. They argued that without such public investment, the economy would not be able to expand.

These theorists worried that, absent democratic public investment to generate employment, the masses would look to authoritarian strongmen for salvation. In 1938, they presciently warned:

The dangers of delay are strikingly brought home to us by the experience in other lands, where governments which refused to accept the responsibility for the proper functioning of their national economies have in case after case fallen victim to their own inaction, and where, in all too many instances, democracy itself has perished […] Such a dictatorship would revive economic activity, but it would be activity devoted increasingly to producing weapons of death and destruction which must sooner or later be used to plunge the country into a holocaust of slaughter and bloodshed.

The demands of wartime

Then came World War II, just as the secular stagnation theorists had warned: first in Asia, then in Europe, and then to the shores of the United States. The war changed the matrix of forces in the U.S. economy. Public-private coordination of industrial production guaranteed both a level of fixed investment (initially geared toward war production, later toward Cold War investments) and higher wages.

Importantly, the case for big government and for overcoming secular stagnation became moralized in public discourse. Some people, the mainstream political rhetoric held, were entitled to economic security because of the sacrifices they had made for the nation in war. This was a far cry from the robust, universalist democratic state envisioned by the theorists of secular stagnation: the wartime political culture that justified big government also limited its reach.

Central to this compact was the idea that the “soldiers had earned it.” The policy changes enacted at the time signaled a changing view of the relationship between the government and the U.S. economy, one in which public investment in the nation’s human capital came to the fore. Yet in practice, many Black soldiers returning from the war were unable to access the benefits afforded to their fellow GIs. And women who had worked in factories during the war were encouraged to return home to raise families during the baby boom.

When these limits to the postwar compact were tested by popular movements in the 1960s, the upshot was not the universalization of economic democracy, but rather a backlash that tightened those limits even further. The Reagan Revolution of the 1980s set the stage for the past 40 years of rising inequality, low investment, and anemic growth.

Today’s secular stagnation theorists are similarly concerned that insufficient public investment in the U.S. economy makes it very difficult to confront growing economic inequality and boost the wages and wealth of average American families. This time around, history tells us, it will be crucial for the moral case for state investment to be universalist from the start, focused on confronting global climate change and all forms of inequality. The failure to respond adequately to the economic crisis of a decade ago was the precondition for today’s fight against a rising fascist bloc at home and around the world.

Lessons from the 20th century for today

Less than a century ago, a senior partner at Goldman Sachs, the chairman of the Federal Reserve, and Harvard University’s leading economists all shared with New Deal politicians a robust sense of the connection between economic inequality, growth, and aggregate demand. These activist intellectuals and policymakers understood that stable and sustainable capitalism rested on institutions that secured high pay for workers, channeled investment toward public purposes, and pushed past the frailties of mature capitalism.

Western capitalism is now wrestling with these issues again. It is time for policymakers to rejoin the mainstream of U.S. history and move beyond the supply-side myths that have increasingly hamstrung the U.S. economy for the past four decades.

—Nic Johnson is a Ph.D. candidate in history at the University of Chicago and is working on a history of American Keynesianism. Robert Manduca is an assistant professor of sociology at the University of Michigan, who researches economic inequality and urban and regional economic development. Chris Hong is a Ph.D. student at the University of Chicago studying the economic and intellectual history of Old Regime France and the Age of Revolutions. The authors are organizers of Reviving Growth Keynesianism, a project that explores the history of American economic thought.