How artificial intelligence uncouples hard work from fair wages through ‘surveillance pay’ practices—and how to fix it

Overview

Workers’ pay is increasingly shaped by opaque algorithms and artificial intelligence systems, shifting compensation decisions away from human managers, clear legal standards, and collective bargaining. This phenomenon—known as algorithmic wage discrimination1 or surveillance pay2—was first documented in app-controlled ride-hail and food-delivery work. Now, it is spreading to a range of other industries and services.

Our first-of-its-kind audit of 500 AI labor-management vendors suggests that traditional employers in industries including health care, customer service, logistics, and retail are now using automated surveillance and decision-making systems to set compensation structures and to calculate individual wages. Without policy interventions, we fear that these practices will become normalized, thus increasing income uncertainty, entrenching bias, and eroding wage-setting transparency.

Government enforcement agencies and legislators working together should affirmatively and proactively prohibit these practices. This can be done by legislatively proscribing the use of real-time data to automate workforce compensation structures (such as tiered bonuses, performance-based incentives, or penalties for low productivity) and to automate wage calculations. To ensure a positive correlation between hard work and fair pay, wage-setting practices must be, at the very minimum, predictable and scrutable.

In this issue brief, we first examine how surveillance pay practices work and where they are increasingly deployed in the U.S. economy. We then present our audit findings in detail before turning to our policy recommendations to ensure fairness and transparency in setting and calculating workers’ pay.

How surveillance pay practices work and where they are becoming more prevalent in the U.S. economy

Nicole picked up a part-time job with ride-hailing firm Lyft Inc. to help cover her mortgage payments after its adjustable interest rate increased.3 After a long week of working at her regular day job in health care, she would wake up early on Saturday mornings and begin her second job—a ride-hail shift at Lyft. For a few weeks, Nicole would drive until she earned $200—the amount she needed to make her higher loan payment—and then go home.

Within two months, however, she found that it took her longer to earn that target amount of $200 per shift. For some inexplicable reason, her daily gig earnings dropped by as much as $50. Though she maintained a high driver score and worked harder than ever, over the course of the following year, Nicole’s Saturday wages dropped an additional $50. To make ends meet, she picked up a shift on Sundays, too. It seemed like the longer Nicole worked for Lyft, the less she earned.

On-demand workers such as Nicole were among the first to experience what has become known as algorithmic wage discrimination, also referred to as surveillance pay: digitalized wage-setting in which firms use large swaths of granular data, including data gathered in real time through automated monitoring systems, to establish compensation brackets and/or to calculate individual pay.4 Studies of on-demand workers dating back to 2016—including those initiated by workers themselves—have found that the introduction of these black box AI systems to determine remuneration has consistently led to low, erratic, and uncertain incomes for app-controlled ride-hail and food delivery workers. Perhaps unsurprisingly, this has led to increased worker stress, higher workplace injury rates, and decreased overall job satisfaction.5

Under surveillance wage systems, different people may be paid different wages for largely the same work, and individual workers cannot predict their incomes over time. These pay practices—especially those that rely on panoptic worker surveillance and on algorithmic intelligence or machine learning systems—invert the deeply held maxim that long, hard work steers toward higher wages and economic security. Not only has pay for app-controlled jobs, such as Nicole’s work for Lyft, decreased over time, suggesting shifting compensation brackets within firms, but industry research also affirms what workers like her have described: People who work longer hours are paid less per hour.

Alarmingly, this uncoupling of hard work and secure, fair pay is no longer just a concern for app-controlled or “gig” workers. The intense pace of funding for AI firms that specialize in labor management has fueled the growth and sale of automated products that aim to increase workforce efficiencies through the introduction of monitoring and decision-making systems that may affect or determine wages. But because software companies do not typically advertise their systems’ abilities to lower labor costs by generating dynamic wage structures and calculations, little is known about these products, their features, and the individual and social implications of their use.

Questions therefore abound. Which sectors of workers are experiencing disruptions in how they are monitored on the job, evaluated for their performances, and paid? What are the capabilities, limitations, and risks of these systems? And, critically, are these systems helping or hurting everyday workers?

To begin to fill this knowledge gap and identify the sectors most likely affected by algorithmic wage discrimination systems, we did a first-of-its-kind analysis of 500 AI vendors who market machine-learning products for use in labor management. Through a risk evaluation of these 500 firms, we then identified a subset of 20 vendors whose products are highly likely to use machine-learning systems to generate surveillance pay. To our knowledge, this is the first systematic study of the spread of algorithmic wage discrimination practices outside of the context of gig work.

Unlike set wages that are determined through contractual negotiations between employers and employees, wages determined by machine-learning systems are generated in real time, rendering them variable, uncertain, and inscrutable to the workers who rely upon them. Despite this, the vast majority of the products in our research, detailed below, lack any transparency or feedback mechanisms. Thus, though the experiences of people such as Nicole were once limited to app-controlled jobs, they are now likely to be spreading to other, more traditional sectors of employment, fundamentally changing the relationship between work and pay for workers across the U.S. labor market.6

The potential impacts of these findings are startling. The spread of surveillance pay practices to critical, human-centered industries such as health care and customer service not only changes the relationship between hard work and fair pay, but also meaningfully alters workplace norms and culture, impacting how patients are treated in hospitals and how consumer problems and interests are addressed. Lessons from research on app-controlled work suggest that the introduction of surveillance pay incentivizes workers to perform in ways that lower labor costs but may de-center patient well-being or customer satisfaction.

The potentially catastrophic consequences, then, of surveillance pay extend far beyond its implications for the insecurity and unpredictability of individual worker pay.

The surveillance pay practices enabled by AI vendors and deployed by their customers

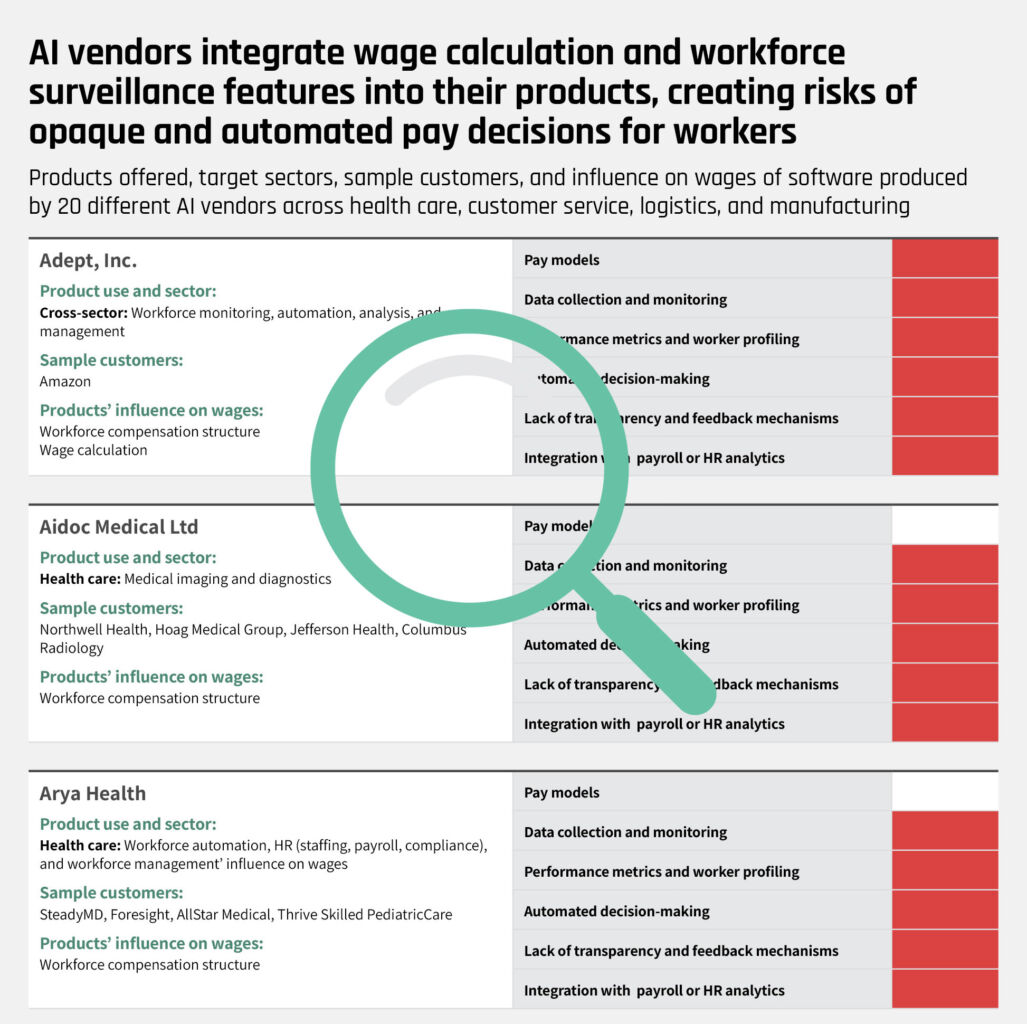

Analyzing the initial 500 AI firms using the parameters listed in our methodology (See Box above), we identified 20 vendors whose products we determined to be at high risk for producing algorithmic wage discrimination. While algorithmic pay practices began in the transportation and delivery sectors, our findings suggest that they have spread to workforce management in a number of industries, most prominently health care, customer service, logistics, and retail.

Of the 20 AI vendors at high risk for enabling surveillance-based wages, five created products specifically for customer service workforce management (including one focused on call centers); four designed products for monitoring and managing workers in health care; one focused specifically on workforce management for last-mile delivery in logistics; and one targeted management in the manufacturing sector. The remaining nine vendors developed cross-sector tools that include platforms designed to be integrated across a range of industries, including retail, finance, education, transportation, and technology.

These vendors typically market their products as general-purpose workforce optimization or performance management systems. While not tied to a single sector, they are often deployed in metric- and performance-intensive environments and can be integrated with existing HR and payroll systems, thus enabling algorithmic wage-setting across a wide range of workplaces. Overall, these cross-sector tools and platforms create a new standard of workforce surveillance, with plug-and-play solutions for performance monitoring, decision-making, and compensation management across diverse industries.

We found that some vendors embed into their platforms the ability to not only adjust workers’ pay in real time but also steer how pay tiers and bonus structures are set over time. These processes are frequently automated with limited-to-no human review.7 A vast majority of the vendors we reviewed (16 out of 20 firms) also link their products directly into payroll or HR systems. Most products give workers zero visibility into the data or logic behind pay calculations, and only a small subset offers built-in channels for employees to review or contest algorithmic decisions. Finally, we also found that many vendors apply identical performance benchmarks across diverse roles and contexts, ignoring factors such as task complexity, local market conditions, and worker accommodations (in possible violation of disability laws and protections). (See Table 2.)

Table 2

Across the vendors and products we reviewed, we found that all relied on an extensive amount of data collected on and through their workforce and customer markets. Whether the firms quantified subjective metrics, such as performance and customer satisfaction, or whether they aimed to analyze real-time behavior for purposes of allocating tasks or determining quotas, the products all relied on data collection and processing. The systems did not provide a means for workers to access the personal or social data that are collected. They also lacked mechanisms to help workers understand and contest either the accuracy of the data collected or the decisions of the machine-learning systems.

The use of these products to suppress wages and produce variable income is concerning, as are the collateral effects. The collection and storage of these workforce data implicate workers’ privacy, ability to negotiate higher pay in this job or their next one, and their federally protected right to organize their workplaces.

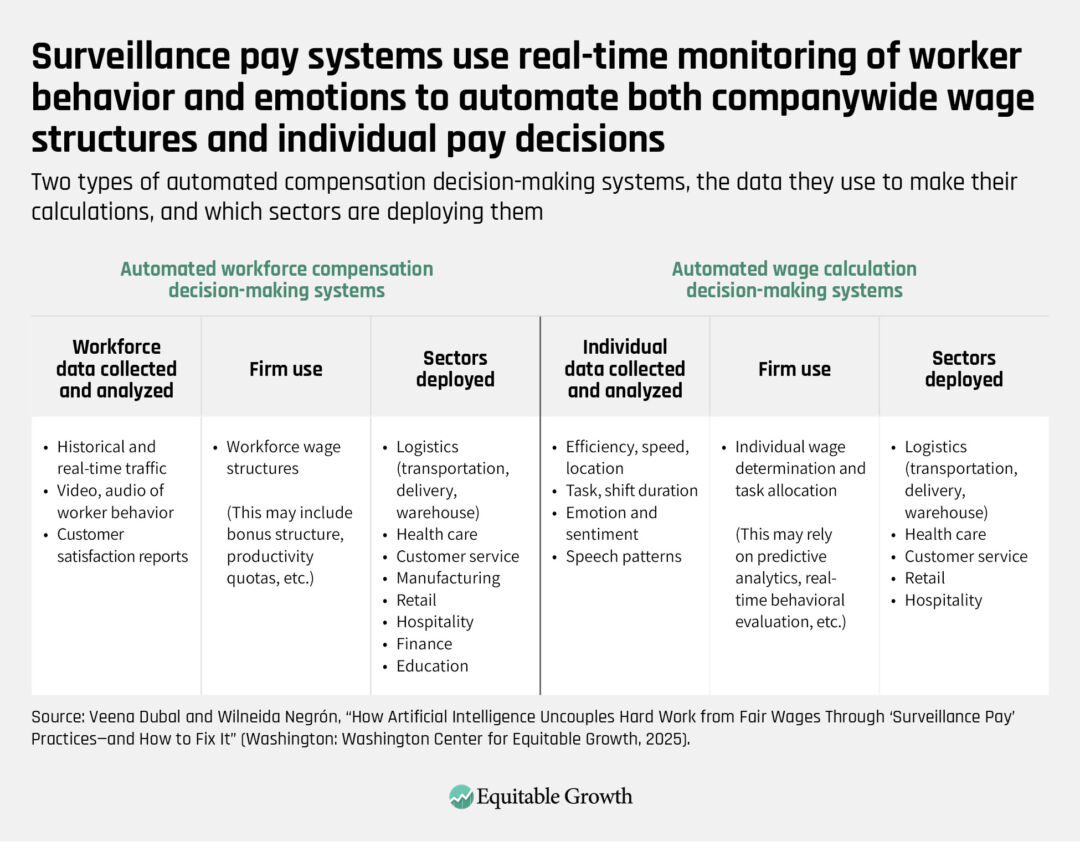

To make sense of our findings—and the practices and harms that emerge—we propose a framework for understanding surveillance pay systems. As Table 2 above delineates, some of these systems produce frameworks for automated workforce compensation, while others produce automated wage-calculation decisions, and still others do both. In our taxonomy, automated workforce compensation systems are those that create dynamic but broad frameworks affecting wage distribution and restrictions across a workforce. These automated systems are less likely to produce day-to-day variability for individual workers, but insofar as they yield wage bands or brackets, we expect that they are a driving factor in workforce wage suppression and wage discrimination.

Similarly, automated wage-calculation systems enable firms to make dynamic wage adjustments based on the system’s machine-learning analysis of an individual’s behavior, temporal and spatial market conditions, and other unknown factors. These systems can be integrated with automated workforce compensation systems. Both together and individually, these processes are likely to exacerbate the impacts of long-term wage stagnation and worker insecurity.

In Table 3 below, we further detail this taxonomy, the types of data both systems may rely on, how the data are used, and in what sectors the tools are currently deployed. The two AI surveillance processes are most likely to overlap when automated compensation structures are used to structure wages, such as worker-pay tiers, base rates, or compensation models based on performance, commissions, fixed or hourly pay, or salary, and where workers are simultaneously rewarded or punished through wage calculations based on a granular analysis of their performance, behaviors, and risk factors. (See Table 3.)

Table 3

Our analysis, for example, suggests that some AI vendors, such as med-tech startups Aidoc Medical Ltd and ARYAHealth, appear to focus more on using machine-learning systems to shape wage structures. Other AI vendors—among them call center-tech firm Level AI, governance and compliance-focused Cognitiveview, and conversation-analytics firm Uniphore—have developed products with notable workplace decision-making capabilities, including task allocation and performance-based reviews, which very likely result in automated wage calculations.

AI vendors that create and sell products that enforce strict productivity benchmarks, customer satisfaction metrics, and key performance indicators include Insightful (dba Workpuls), Netomi Inc., AssemblyAI, and kore.ai, as well as Level AI and Uniphore. But because these firms’ products are not integrated into metric-based pay systems, we suspect their customers use their products for automated workforce compensation decision-making.

Still other AI vendors, among them Symbl.ai, Betterworks Systems, Inc., and SupportLogic, offer products that enforce productivity benchmarks and key performance indicators and appear to integrate dynamic pay models with real-time metrics, such as performance-based compensation, bonuses, and penalties. Since earnings are frequently adjusted based on performance data, customer interactions, and behavioral analytics, this approach is likely to result in automated wage calculations, resulting in wage variability and unpredictability for workers.

Notably, our research finds that employers across the customer service, finance, manufacturing, computer science, and health care sectors are using these tools, including major U.S. companies such as Intuit Inc., Salesforce Inc., Colgate-Palmolive Co., the Federal Home Loan Mortgage Corp. (Freddie Mac), American Well Corp., and Healthcare Services Group Inc.

How surveillance pay practices match up to existing U.S. labor laws and traditional workplace practices

In the United States, four major federal laws govern wages and working environments. Surveillance pay managed by algorithmic wage systems—both those that set workforce compensation structures and those that automate wage calculations—may violate the letter and spirit of these laws. The impacts of low and variable wages on workplace safety and the ability of employers to use automated monitoring systems to detect worker organizing and punish it with low pay are instances in which surveillance pay practices may directly violate existing employment and labor laws.

Even in instances where the wage outcomes of automated compensation structures and automated wage calculations do not directly violate minimum wage laws, overtime regulations, labor protections, or anti-discrimination laws, their impacts offend the legislative intent behind wage regulations—namely, the creation of predictable, living wages, in which people are paid equally for equal work. In this section of our issue brief, we describe the federal laws that currently govern wages and the working environments produced through wage-setting practices and detail the ways that algorithmic wage-setting practices that power surveillance pay may be upending these protections and the norms they have produced.

Minimum wages and compensable time on the clock

The most widely known federal work law governs how long workers labor and how little they can be paid. In response to labor agitation created by widespread unemployment and poverty during the Great Depression nearly 100 years ago, Congress passed the Fair Labor Standards Act, a centerpiece of the New Deal legislation of the 1930s. The law establishes minimum wage, overtime, and record-keeping requirements and sets the floor (not the ceiling) for wages across the country. The federal minimum wage is set by Congress and has been a staggeringly low $7.25 per hour since 2009. In the face of congressional inaction and changing standards of living, many states and local municipalities have periodically passed legislation to set and raise the local minimum wage to near living wage levels of about double that amount.

Algorithmically determined wage structures and calculations do not necessarily violate minimum wage laws, but, to date, they have been used by firms to make it more difficult to identify violations and to enforce the law. In app-controlled ride-hail and food-delivery work in the United States, for example, firms have maintained that their workers are only entitled to pay after work is sent to them, not when they are waiting for work. This piece-pay practice disconnects time and pay, making it impossible for individual workers to know how much they might earn for work over any period of time.8

The Fair Labor Standards Act abolished piece-pay in many industries. Yet these algorithmic wage structures and calculations raise the problem anew by setting wages that are variable and difficult for workers and enforcement agencies to decipher.

What’s more, some of the firms that use digitalized piece-pay practices are trying and succeeding at amending state laws to make it legal to discriminate between workers in this way. In California, for example, the ride-hail and food-delivery firms with the largest market shares—Uber Technologies Inc., Lyft Inc., DoorDash Inc., and Maplebear Inc.’s Instacart—sponsored and won a 2020 referendum9 to cement their algorithmic wage discrimination practices into state law. Under the law, workers laboring for so-called transportation and delivery network companies are formally exempt from state minimum wage and hour protections and instead guaranteed 120 percent of the minimum wage of the area in which they are working—but only after they have been assigned work, not while they are awaiting this work.
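
The effect of a guarantee that covers only engaged time can be illustrated with simple arithmetic. The sketch below is a hedged illustration rather than the statutory formula: the function names, the six engaged hours, and the four waiting hours are hypothetical assumptions, the $16.50 figure is the 2025 California minimum wage cited in this brief, and expenses such as gas, insurance, and vehicle depreciation are ignored, so real take-home pay would be lower still.

```python
def effective_hourly_pay(local_min_wage, engaged_hours, waiting_hours,
                         guarantee_multiplier=1.20):
    """Spread the engaged-time guarantee over ALL hours spent on the app.

    Hypothetical helper: the guarantee is modeled as
    guarantee_multiplier * local_min_wage for each engaged hour,
    with nothing owed for time spent waiting for work.
    """
    if engaged_hours < 0 or waiting_hours < 0:
        raise ValueError("hours must be non-negative")
    total_hours = engaged_hours + waiting_hours
    if total_hours == 0:
        raise ValueError("total hours must be positive")
    guaranteed_pay = guarantee_multiplier * local_min_wage * engaged_hours
    return guaranteed_pay / total_hours


def breakeven_engaged_share(guarantee_multiplier=1.20):
    """Share of on-app time that must be engaged for the guarantee,
    averaged over all hours, to equal the ordinary minimum wage."""
    return 1 / guarantee_multiplier


if __name__ == "__main__":
    # Hypothetical 10-hour shift: 6 hours with assigned work, 4 hours waiting.
    pay = effective_hourly_pay(16.50, engaged_hours=6, waiting_hours=4)
    print(f"Effective guaranteed pay per hour on the app: ${pay:.2f}")
    print(f"Engaged share needed to match the minimum wage: "
          f"{breakeven_engaged_share():.1%}")
```

Under these assumed hours, the guarantee averages out to $11.88 per hour on the app, well under the $16.50 minimum wage. With a 120 percent multiplier, a worker would need assigned work for roughly 83 percent of their time on the app just to match the ordinary minimum, before any expenses.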

After this firm-sponsored law was enacted in 2020, a worker-led study found that Uber and Lyft drivers in California were making $6.20 per hour, with an hourly wage floor of about $4.20.10 In contrast, in 2025, the California minimum wage is $16.50 per hour.

Even the study’s authors were surprised by the low algorithmically determined earnings because they said that most drivers work until they hit a certain wage target, not accounting for the tolls, airport fees, gas, insurance, and vehicle depreciation costs that they incur in the process. And, in another California worker-led experiment, Uber and Lyft drivers themselves affirmed what they had long suspected—that they were being allocated different wages for the exact same work, a topic we turn to in the next section.11

Together, automated workforce compensation and calculation systems violate the spirit of local minimum wage laws and may also violate the Fair Labor Standards Act. In practice, the California model exists in many other states where local legislatures have cemented the status of ride-hail and food-delivery workers as “independent contractors” for the purposes of state law. But state laws do not preempt FLSA obligations. As of the time of writing, the federal government has not initiated an enforcement action in any state.

Equal pay for equal work

In response to social and labor movements and persistent age-, disability-, gender-, and race-based wage gaps, Congress has passed laws over the past seven decades that, in theory, guarantee that workers doing broadly similar work earn the same wages for that work. The idea of “equal pay for equal work” emerged from the women’s movement and civil rights movement in the 20th century, and can be found most clearly in Title VII of the Civil Rights Act of 1964, the Age Discrimination in Employment Act of 1967, the Equal Pay Act of 1963, and the Americans with Disabilities Act of 1990.

Together, these laws prohibit differential pay because of race, color, religion, sex, national origin, age, or disability. They have been notoriously difficult to enforce, and identity-based wage gaps remain persistent in the U.S. labor market. Still, they create baseline aspirations for wage-setting in the labor market.

Algorithmic wage practices often violate the spirit of “equal pay for equal work” laws, and in some cases, they may also be violating the letter of the law. Indeed, wage-calculating AI is rooted in pay discrimination—differentiating between workers on the basis of any number of known and unknown factors, including, as discussed above, predictive analytics that attempt to determine a worker’s potential tolerance for low pay.

While some AI vendors claim that their algorithmic wage-calculation software, which enables surveillance pay, is based on objective analysis of workers’ performance, their systems often do not provide mechanisms for workers’ feedback or share clear information on how workers are evaluated in relation to their pay. Anecdotal evidence suggests that algorithmic performance analysis may also produce uncorrectable errors, resulting in unfair outcomes.

One case in point was included in the Biden administration’s “Blueprint for an AI Bill of Rights.” The White House Office of Science and Technology Policy included the following example in the blueprint’s Safe and Effective Systems section: “A company installed AI-powered cameras in its delivery vans in order to evaluate the road safety habits of its drivers, but the system incorrectly penalized drivers when other cars cut them off or when other events beyond their control took place on the road. As a result, drivers were incorrectly ineligible to receive a bonus.”12

In turn, these undetectable and unfair errors may have devastating impacts on a worker’s ability to put food on the table or to pay rent for their homes. Studies suggest that low-income workers rarely contest wage violations in offline labor markets. If the wage errors themselves are inscrutable, then, by extension, workers in online labor markets would be even less inclined to do so.

These practices—in addition to resulting in discriminatory wages between any two workers doing broadly similar work at the same time and in the same way—may also exacerbate existing identity-based wage inequalities. Though Uber rarely shares pay data with third parties, research produced alongside Uber’s own chief economist, Jonathan Hall, found that “although neither the pay formula nor the dispatch algorithm for assigning riders to drivers depend on a driver’s gender,” women working for Uber make roughly 7 percent less than men.13 As one of us has argued elsewhere, “On its own terms, the publication of this finding signals a troubling moral shift in how firms understand the problem of gender discrimination and their legal responsibility to avoid it.”14

Collective organizing and bargaining

The National Labor Relations Act of 1935 created a nationwide industrial system in which collective worker organizing became a protected right for most workers and collective bargaining became systematized through national oversight.15 The law resulted both in the growth of labor power and, over time, a decrease in industrial unrest. Notably, at the behest of Southern Democrats at the time, who hoped to maintain the racialized nature of Southern plantation economies, the law excluded both domestic and agricultural workers from its protections, and Congress later also included a carveout for independent contractors.16

African American civil rights advocates at the time objected to the exclusion of these largely Black and minority workforces, understanding this exclusion as having devastating impacts on any post-Civil War gains made by these workers. They maintained that lower wages for Black workers would, in effect, “relegate … [African American workers] into a low wage caste,”17 and “destroy any possibility of ever forming a strong and effective labor movement.”18

The use of wage-calculating algorithms to differentially set wages in sectors composed largely of workers of color, as is the case with platform-enabled ride-hail and food-delivery firms, has indeed not only created a second tier of wages—or, as one of us has called it, “a new racial wage code”—but also disrupted efforts at collective organizing. As one of our studies that explores the wage experiences of Uber drivers found, “The fact that different workers made different amounts for largely the same work was a source of grievance defined through inequities that often pitted workers against one another, leaving them to wonder what they were doing wrong or what others had figured out.”19 This, in turn, may be used as an employer tactic to violate the spirit of the National Labor Relations Act, making it more and more difficult for workers to collectively organize.

Additionally, algorithmic wage discrimination may be used to retaliate against workers for protected union-organizing activities. A union of Japanese workers, for example, has alleged that algorithmic wage-setting software has been used to target union organizers, effectively punishing those workers with lower wages.20 Of course, the nature of the black box systems that firms use to set wages and wage structures makes it difficult to understand or to challenge an outcome such as this. In the United States, the use of an algorithmic wage-setting system to punish organizers would be unlawful retaliation under the National Labor Relations Act.

Safety and health standards at work

Workers in the United States have the broad and often-unrealized right to safe and healthy workplaces under the Occupational Safety and Health Act of 1970. Extant empirical evidence from around the globe suggests that algorithmic wage practices utilized by firms have resulted in high rates of psychosocial and physical injuries.21 These outcomes can be traced, in part, to the way workers are paid through algorithmic wage structures and wage-setting systems.

Cornell University’s Worker Institute and the Worker’s Justice Project/Los Deliveristas Unidos together found that in New York City, 42 percent of delivery workers “reported non-payment or underpayment of their wages, with almost no recourse because of how the firms control them through digital machinery.”22 The underpayment and non-payment of wages set and distributed by algorithms lead workers to labor in ways that make them vulnerable to risk-taking and injury. As one of us has argued elsewhere:

[T]he launch of ride-hailing companies in cities has been associated with a three-percent increase in the number of traffic fatalities. Working long and hard, with low wages and little predictability… may give rise to workplace dangers, including more crashes. A joint study of the California Public Utilities Commission and the California Department of Insurance found that ride-hailing accidents in that state alone generated 9,388 claims that resulted in a combined $185.6 million loss in 2014, 2015, and 2016.23

The low and unpredictable wages created through surveillance pay practices powered by these algorithmic systems can also result in emotional injuries. The fear of not earning enough, the pressure to accept all work without fail because declining it will result in lower wages or termination, and the need to work at great speed to earn more can all cause psychosocial injuries. In a large study of workers who received algorithmically set wages in the European Union, researchers “found that workers suffered high rates of depression relative to other jobs.”24

Federal laws and some state protections place the responsibility on employers to create safe and healthy workplaces, but algorithmic wage-setting systems create new health and safety problems that have yet to be addressed robustly at the state or federal level.

Recommendations to ensure surveillance pay practices do not harm workers and depress their earnings

As detailed above, algorithmic wage discrimination practices are proliferating across an increasing number of sectors in the U.S. economy. The large body of evidence produced by researchers who study these practices in on-demand work settings suggests that firms that use these systems may risk violating the existing employment and labor protections detailed in the previous section. The practices may also violate antitrust and consumer laws.25

Yet, to date, few agencies have attempted to enforce these laws at the federal or state level. Opportunities to do so remain, however, even if wages do not fall below the minimum wage or state agencies do not have data to determine a violation of anti-discrimination laws. Why? Because if surveillance pay practices lend themselves to health and safety violations—for example, by incentivizing workers to labor longer in ways that cause physical and psychological injuries—then state and municipal agencies may enforce existing health and safety protections.

With the extraordinary speed of production of AI for workforce management, and the implications of algorithmic wage-setting practices across the U.S. labor market, we believe that both state and federal legislators are well-situated to step in. One approach, for which we advocate here, is to craft legislation to ban the use of real-time data both to automate workforce compensation structures and to automate wage calculations. Wage-setting has traditionally been transparent—at least to the workers receiving said wages—and the new opacity of wage structures and wage calculations creates new and alarming harms.

A second, less comprehensive approach is for legislators to focus specifically on banning the use of automated wage-calculation systems. Such laws should extend to both employers and hiring entities that use independent contractor labor and should apply to wages for all the time that their workers work and wait for work. In this context, providing for both private and public enforcement will make firms more reluctant to engage in these practices.

Critically, we argue that legislation mandating transparency of algorithmic wage-setting systems alone will not do enough to mitigate these harms. Existing case studies of workers attempting to use their General Data Protection Regulation rights in the European Union to understand wage and termination practices underscore transparency’s limitations. These workers in the EU argue that even when they are given explanations for how they are paid, the systems change too frequently for them to use this information effectively to create income predictability. They also generally lack the power and technical expertise to use data releases to locate and correct violations of practice or law.26

Conclusion

Scholars, policy analysts, lawmakers, and labor advocates alike have raised significant concerns about the immediate and long-term financial insecurity of workers in the United States. Observers have identified macroeconomic trends, including historical wage stagnation,27 inflation’s effects on real wages,28 and widening structural and geographical wage disparities,29 as contributors to national problems of precarity and immobility. Despite significant productivity growth since the 1970s, most U.S. workers’ wages have stagnated, leading to a significant productivity-pay gap.30 This means workers have not benefited from productivity and efficiency gains arising from the growing use of technology for labor management.

More recently, these structural challenges have been compounded by the growing discretionary use of novel technologies at work. Developments in artificial intelligence and other digital machinery have led to well-documented problems, including worker displacement,31 reduced job quality,32 and the perpetuation of bias and discrimination in the labor market.

Across the political spectrum, workers and politicians agree that hard work should be positively correlated with fair pay. This issue brief has shown that machine-learning systems that very likely uncouple fair pay from hard work, and thereby lead to wage uncertainty, discrimination, and suppression, are rapidly spreading across the U.S. labor market. This should alarm analysts and lawmakers concerned about economic inequality.

The American dream—however fraught and unreachable for many—is directly under attack. In this moment of income insecurity for most U.S. workers, legislators across the aisle should be motivated to write laws at both the federal and state level to address the problems endemic to the algorithmic wage-setting systems that power inequitable surveillance pay practices.

About the authors

Veena Dubal is a professor of law and legal anthropologist at the University of California, Irvine School of Law. Her research focuses broadly on law, technology, and precarious workers, combining legal and empirical analysis to explore issues of labor and inequality.

Wilneida Negrón is a political scientist, technologist, and strategist whose work bridges labor rights, emerging technology, and public policy. As the architect behind field-defining initiatives on workplace surveillance and ethical innovation, she has shaped how funders, organizers, and policymakers address the future of work and tech accountability.

End Notes

1. Zephyr Teachout, “Algorithmic Personalized Wages,” Politics and Society 51 (3) (2023), available at https://journals.sagepub.com/doi/10.1177/00323292231183828; Veena Dubal, “On Algorithmic Wage Discrimination,” Columbia Law Review 123 (7) (2023), available at https://columbialawreview.org/content/on-algorithmic-wage-discrimination/. Although the use of automated machinery to set wages was first theorized in these papers by Zephyr Teachout and Veena Dubal, respectively, it had long been identified and debated by scholars and workers alike. See Coworker.org, “Data Shows Shipt’s ‘Black-Box’ Algorithm Reduces Pay of 40% of Workers,” October 15, 2020, available at https://home.coworker.org/data-shows-shipts-black-box-algorithm-reduces-pay-of-40-of-workers/; Dana Calacci, “The Gig Workers Who Fought an Algorithm: When Their Pay Suddenly Dropped, Shipt’s Delivery Drivers Dug into the Data,” IEEE Spectrum 61 (8) (2024): 42–47, available at https://pure.psu.edu/en/publications/the-gig-workers-who-fought-an-algorithm-when-their-pay-suddenly-d; Niels Van Doorn, “From a Wage to a Wager: Dynamic Pricing in the Gig Economy” (2020), available at https://autonomy.work/wp-content/uploads/2020/09/VanDoorn.pdf.

2. AI Now Institute and others, “Prohibiting Surveillance Prices and Wages” (2025), available at https://towardsjustice.org/wp-content/uploads/2025/02/Real-Surveillance-Prices-and-Wages-Report.pdf.

3. Nicole was interviewed by one of the authors as part of a larger research project.

4. Dubal, “On Algorithmic Wage Discrimination.”

5. Veena Dubal and Vitor Araújo Filgueiras, “Digital Labor Platforms as Machines of Production,” Yale Journal of Law & Technology 26 (2023), available at https://yjolt.org/digital-labor-platforms-machines-production.

6. In a recent nongig example, the company LuLaRoe quietly overhauled its monthly bonus structure (raising qualification thresholds, shortening payout windows, and changing sales metrics) without warning or input, prompting its employees to launch a Coworker.org protest petition. Many of the consultants who signed the petition stressed that they depend on these bonuses to feed their families and support their teams, and now face sudden income drops and unpredictable earnings with no internal appeals process. See “Lularoe – Please Reconsider Recent Changes to the Bonus Structure,” available at https://www.coworker.org/petitions/lularoe-please-reconsider-recent-changes-to-the-bonus-structure (last accessed July 2025).

7. Jill Barth, “Payroll’s big technology shift: From ‘printing checks’ to strategic force,” HR Executive, May 1, 2025, available at https://hrexecutive.com/payrolls-big-technology-shift-from-printing-checks-to-strategic-force/.

8. This amounts to a form of algorithmically determined piecework. Piecework was largely abolished by the Fair Labor Standards Act, but vestiges of the practice remain legalized in case law. For determining what is “compensable time,” see Veena Dubal, “The Legal Uncertainties of Gig Work,” in Guy Davidov, Brian Langille, and Gillian Lester, eds., The Oxford Handbook of the Law of Work (Oxford, UK: Oxford University Press, 2024), pp. 801–803.

9. Faiz Siddiqui and Nitasha Tiku, “Uber and Lyft used sneaky tactics to avoid making drivers employees in California, voters say. Now, they’re going national,” The Washington Post, November 17, 2020, available at https://www.washingtonpost.com/technology/2020/11/17/uber-lyft-prop22-misinformation/.

10. National Equity Atlas, “Uber and Lyft promised drivers good pay, benefits, and flexibility with California’s Prop 22. What drivers got were poverty wages” (n.d.), available at https://nationalequityatlas.org/prop22-paystudy-summary.

11. More Perfect Union, “Video: We Put 7 Uber Drivers in One Room. What We Found Will Shock You,” September 9, 2024, available at https://www.youtube.com/watch?v=OEXJmNj6SPk.

12. The White House, “Blueprint for An AI Bill of Rights” (2022), available at https://bidenwhitehouse.archives.gov/wp-content/uploads/2022/10/Blueprint-for-an-AI-Bill-of-Rights.pdf.

13. Dubal, “On Algorithmic Wage Discrimination,” p. 1959, referencing Cody Cook and others, “The Gender Earnings Gap in the Gig Economy: Evidence From Over a Million Rideshare Drivers,” The Review of Economic Studies 88 (5) (2021), pp. 2210–2238, available at https://academic.oup.com/restud/article-abstract/88/5/2210/6007480?redirectedFrom=fulltext&login=false.

14. Ibid.

15. National Labor Relations Act (NLRA), 29 U.S.C. §§ 151–169.

16. Veena Dubal, “Wage slave v. Entrepreneur: Contesting the Dualism of Legal Worker Identities.” California Law Review (2017), 65-123, available at https://repository.uclawsf.edu/cgi/viewcontent.cgi?article=2595&context=faculty_scholarship.

17. Veena Dubal, “The New Racial Wage Code,” Harvard Law and Policy Review 15 (2021), p. 523, available at https://journals.law.harvard.edu/lpr/wp-content/uploads/sites/89/2022/05/3-Dubal.pdf.

18. Ibid., p. 523.

19. See Dubal, “On Algorithmic Wage Discrimination,” pp. 1963–64.

20. Ibid., p. 1941, n. 39.

21. See, generally, Dubal and Araújo Filgueiras, “Digital Labor Platforms as Machines of Production.”

22. Ibid., p. 588.

23. Ibid., pp. 589–90.

24. Ibid., p. 584, citing Pierre Bérastégui, “Exposure to psychosocial risk factors in the gig economy: a systematic review” (Brussels: European Trade Union Institute, 2021), available at https://www.etui.org/sites/default/files/2021-01/Exposure to psychosocial risk factors in the gig economy-a systematic review-web-2021.pdf.

25. AI Now Institute and others, “Prohibiting Surveillance Prices and Wages.”

26. Veena Dubal, “Data Laws at Work,” Yale Law Journal 134 (2025), available at https://www.yalelawjournal.org/forum/data-laws-at-work.

27. Lawrence Mishel, Elise Gould, and Josh Bivens, “Wage Stagnation in Nine Charts” (Washington: Economic Policy Institute, 2015), available at https://www.epi.org/publication/charting-wage-stagnation/.

28. Wendy Edelberg and Noadia Steinmetz-Silber, “Has pay kept up with inflation?” (Washington: Brookings Institution, 2025), available at https://www.brookings.edu/articles/has-pay-kept-up-with-inflation/.

29. Kevin Rinz, “What is going on with wage growth in the United States?” (Washington: Washington Center for Equitable Growth, 2024), available at https://equitablegrowth.org/what-is-going-on-with-wage-growth-in-the-united-states/.

30. Economic Policy Institute, “The Productivity-Pay Gap” (2025), available at https://www.epi.org/productivity-pay-gap/.

31. Harry J. Holzer, “Understanding the impact of automation on workers, jobs, and wages” (Washington: Brookings Institution, 2022), available at https://www.brookings.edu/articles/understanding-the-impact-of-automation-on-workers-jobs-and-wages/.

32. Larry Liu, “Job quality and automation: Do more automatable occupations have less job satisfaction and health?” Journal of Industrial Relations 65 (1) (2022), available at https://journals.sagepub.com/doi/full/10.1177/00221856221129639.