Slides from a presentation by Michael Kades for the House Antitrust Caucus on January 19, 2018. In the presentation, Kades explains that antitrust enforcers lack the resources to protect consumers and promote competition during the current merger wave, which likely has contributed to increases in concentration and monopoly power.

Product innovations and inflation in the U.S. retail sector have magnified inequality

Do product innovations affect economic inequality? In a new working paper published today, I find that shifts in income distribution in the United States lead to product innovations that target high-income households, which increases purchasing-power inequality. Such product innovations have both a direct effect on purchasing power across income groups because they target specific groups, as well as an indirect effect through competition with products already in the marketplace.

In short, wealthier households are more likely to spend on product categories where product innovations are more common and where competition is increasing, while low- and middle-income households are more likely to purchase products that face less competitive pricing pressures in the marketplace. For economic policymakers, this dynamic has important implications for the price indexation of government programs that provide support for low- and middle-income families.

New Working Paper

The unequal gains from product innovations: Evidence from the US retail sector

Here’s the new fact

In the first part of my analysis, I measure how the introduction of new products and price changes on existing products affect economic inequality in a setting where very detailed data are available. I examine scanner data recorded at cash registers in the U.S. retail sector between 2004 and 2015—covering broad product categories, including food, alcohol, beauty and health, general merchandise, and household supplies, all of which account for 15 percent of household expenditures. I find that product categories predominantly consumed by high-income households—such as organic food, craft beer, and branded drugs—feature higher levels of product innovations (measured by entry of new products) and lower levels of inflation (measured using price changes on already existing products).

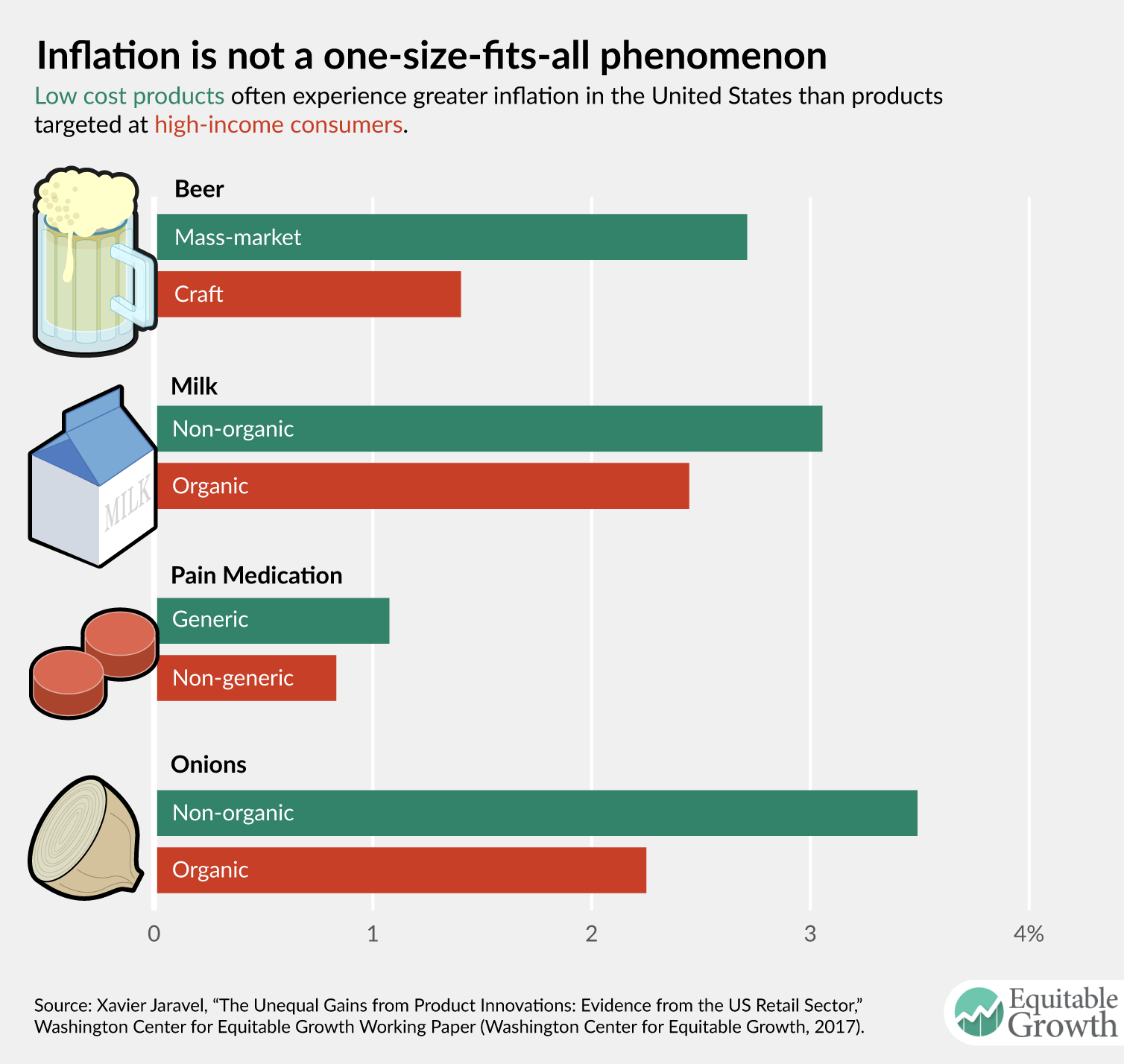

The accompanying infographic charts how this dynamic plays out in the marketplace for four everyday consumer products. (See Figure 1.)

Figure 1

Taking into account the 3 million products in the database, these price effects are large. In the U.S. retail sector, annual inflation was 0.65 percentage points lower for households earning more than $100,000 per year, relative to households making less than $30,000 per year. The current methodology used by statistical agencies, including the U.S. Department of Labor’s Bureau of Labor Statistics, cannot capture this difference, which arises primarily because income groups differ in their spending patterns along the quality ladder within detailed item categories. (BLS currently collects data measuring income-group-specific spending patterns across broad item categories, leading to aggregation bias.)
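The aggregation bias described above can be made concrete with a small sketch. Every number here is hypothetical and chosen only for illustration (one category, two products, two income groups); none of it comes from the working paper or from BLS data. The sketch compares group-specific inflation computed with product-level spending weights against a single category-level index applied to both groups.

```python
# Hypothetical illustration of aggregation bias. Every number below is made up.

# Annual price change for two products within one item category (beer).
product_inflation = {"craft": 0.01, "mass_market": 0.03}

# How each income group splits its spending within the category.
within_category_shares = {
    "high_income": {"craft": 0.7, "mass_market": 0.3},
    "low_income": {"craft": 0.2, "mass_market": 0.8},
}


def weighted_inflation(shares, inflation):
    """Expenditure-weighted average of product-level price changes."""
    return sum(share * inflation[product] for product, share in shares.items())


# Product-level weights: each group gets its own inflation rate.
product_level = {
    group: weighted_inflation(shares, product_inflation)
    for group, shares in within_category_shares.items()
}

# Category-level weights: one index for the whole category, built here from
# the simple average of the two groups' shares (assumes equal total spending).
pooled_shares = {
    product: sum(s[product] for s in within_category_shares.values())
    / len(within_category_shares)
    for product in product_inflation
}
category_index = weighted_inflation(pooled_shares, product_inflation)
category_level = {group: category_index for group in within_category_shares}

gap_product_level = product_level["low_income"] - product_level["high_income"]
gap_category_level = category_level["low_income"] - category_level["high_income"]

print(f"Product-level gap:  {gap_product_level * 100:.2f} percentage points")
print(f"Category-level gap: {gap_category_level * 100:.2f} percentage points")
```

In this toy setup the product-level weights show a one-percentage-point inflation gap between the two groups, while the category-level index shows none, because both groups are assigned the same category price change.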

Explaining the new fact

In the second part of my analysis, I find that the patterns of product innovations and inflation inequality in the U.S. retail sector resulted from the response of firms to so-called market-size effects. Specifically:

- The demand for products consumed by high-income households increased because of growth and rising income inequality.

- In response, firms introduced more new products catering to such households.

- As a result, already existing products in these market segments lowered their price due to increased competitive pressure.

To establish empirically the causal effect of increasing demand on firms’ product innovations, I study the effect of changes in demand resulting from shifts in the national income and age distributions over time. I find that increasing demand in a market segment leads to the introduction of more new products and lower inflation for already existing products due to increasing competitive pressure. For instance, in the case of craft beer, new varieties of craft beer are constantly being introduced, and this increase in competition keeps inflation low for existing varieties of craft beer, while competition is more stable and inflation is higher for more longstanding products in the market such as mass-produced beer.

Implications

The results of the analysis suggest that two trends are at work in the U.S. economy today. First, economic inequality has increased faster than is commonly thought because of the dynamics of product innovations and inflation. And second, rising income inequality has an amplification effect because when high-income households get richer, firms strategically introduce more new products catering to these consumers, which increases inequality further.

One limitation of the analysis is that it primarily covers the U.S. retail sector only from 2004 to 2015. Yet I find a similar trend of lower inflation for higher-income households when considering the full consumption basket of U.S. households going back to 1953 using data from the Consumer Price Index and the Consumer Expenditure Survey.

Moreover, the results from the U.S. retail sector have direct implications for the indexation of various government safety-net programs that are currently indexed to the food Consumer Price Index such as the U.S. Department of Agriculture’s Supplemental Nutrition Assistance Program (also known as food stamps). Based on the sample of goods examined in my research, I find that indexation of the benefits on the food Consumer Price Index implies an increase in nominal food stamp benefits of 24.8 percent between 2004 and 2015. In contrast, indexation of the benefits on the relevant food price index for households eligible for supplemental nutrition assistance would imply a much higher increase of 35.5 percent because these households effectively face a higher food inflation rate.
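The indexation comparison can be worked through as simple arithmetic. The sketch below uses only the two cumulative increases quoted above; the $100 starting benefit and the 11-year span used for annualizing are assumptions made for illustration, and the variable names are hypothetical.

```python
# Cumulative food price increases, 2004 to 2015, as reported in the text.
FOOD_CPI_INCREASE = 0.248      # food Consumer Price Index
ELIGIBLE_HH_INCREASE = 0.355   # food price index for SNAP-eligible households

# Hypothetical 2004 benefit, chosen only to make the arithmetic concrete.
BASE_BENEFIT = 100.0
YEARS = 2015 - 2004  # assumes an 11-year span


def annualized(cumulative_increase, years):
    """Constant annual rate that compounds to the cumulative increase."""
    return (1 + cumulative_increase) ** (1 / years) - 1


benefit_under_cpi = BASE_BENEFIT * (1 + FOOD_CPI_INCREASE)
benefit_keeping_pace = BASE_BENEFIT * (1 + ELIGIBLE_HH_INCREASE)

# Fraction of 2004 purchasing power lost when benefits follow the food CPI
# while recipients' own food prices rise faster.
purchasing_power_loss = 1 - benefit_under_cpi / benefit_keeping_pace

print(f"2015 benefit under food CPI indexation: ${benefit_under_cpi:.2f}")
print(f"2015 benefit needed to keep pace:       ${benefit_keeping_pace:.2f}")
print(f"Purchasing power lost:                  {purchasing_power_loss:.1%}")
print(f"Annualized food CPI inflation:          {annualized(FOOD_CPI_INCREASE, YEARS):.2%}")
print(f"Annualized inflation, eligible households: {annualized(ELIGIBLE_HH_INCREASE, YEARS):.2%}")
```

With these inputs, a benefit indexed to the food CPI ends the period buying roughly 8 percent less food for an eligible household than it did in 2004.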

—Xavier Jaravel is a post-doctoral fellow in economics at Stanford University.

Equitable Growth in Conversation: an interview with the OECD’s Stefano Scarpetta

“Equitable Growth in Conversation” is a recurring series where we talk with economists and other social scientists to help us better understand whether and how economic inequality affects economic growth and stability.

In this installment, Equitable Growth’s executive director and chief economist Heather Boushey talks with Stefano Scarpetta, the Director of Employment, Labour and Social Affairs at the Organisation for Economic Cooperation and Development in Paris, about how high levels of inequality are affecting economic growth in the organization’s 35 member countries and possible policy responses.

Read their conversation below

Heather Boushey: The OECD has been doing really interesting work over the past few years looking at how and whether inequality affects macroeconomic outcomes or outcomes more generally. A lot of questions are circling around the report that came out last year titled “In It Together: Why Less Inequality Benefits All.” One thing that really strikes me is that inequality isn’t a unitary phenomenon. It’s not just one thing. You can have inequality increasing at the top of the income spectrum, you can have something happening in the middle, you could have something happening at the bottom. But these are different trends and they may affect economic growth and stability in different ways because of their effects on people or on consumption or on what have you. One of the things that I really enjoyed about that OECD report is that you used multiple measures of inequality; you didn’t just stick with one. So, I wondered if you could talk a little bit about that.

Stefano Scarpetta: Sure. Some indicators of income inequality, such as the Gini coefficient, of course don’t tell us exactly what’s happening with different income groups. So, much of the work we have done at the OECD is to look at the income performance of different groups along the distribution. And one of the findings that is emerging, not just in the United States but also across a wide range of advanced and emerging economies, is that income for those at the top 10 percent, and in many cases actually the top one percent, has been growing very rapidly. At the same time the income of those on the bottom—not only the bottom 10 percent but actually sometimes the bottom 40 percent—has been dragging, if not declining, in some countries.

This fairly widespread trend becomes very important when we think about policies to address inequalities, but also when you want to look at the links between inequality and economic growth. As you know, there are at least two broad strands of theory about the links between inequality and growth. A traditional theory focuses on economic incentives. Some inequality, especially in the upper part of the distribution, is needed to provide the right incentives for people to take risks, to invest, and innovate. An alternative theory instead focuses on missing opportunities associated with high inequality: it focuses more on the bottom part of the distribution, and stresses that high inequality might actually prevent those at the bottom of the distribution from investing in, say, human capital or health, thus hindering long-term growth.

We have done empirical work looking at the links between inequality and economic growth. This is very difficult, and though we have used some state-of-the-art economic techniques, we still have a number of limitations. We have looked at 30 years of data across a wide range of OECD countries. And basically, the bottom line of this analysis is that there seems to be clear evidence that when inequality affects the bottom 40 percent [of the income distribution], then this leads to lower economic growth. These results are fairly robust, but what’s the mechanism behind this effect? That’s what we’ve been doing as well.

Potentially there are different types of mechanisms. We have investigated one of them in particular: the reduced opportunities that people in the bottom part of the income distribution have to invest in their human capital in highly unequal countries. The OECD has coordinated the Adult Skills Survey, which assesses the actual competencies of adults between the ages of 16 and 65 in 24 countries along three main foundation skills: literacy, numeracy and problem solving. Thus the survey goes beyond the qualification of individuals and allows us to link actual competencies with their labor market status. The survey actually measures what people can do over and above their qualifications. Then we looked at whether educational outcomes, not only in terms of qualifications but also actual competencies, are related to the socioeconomic backgrounds of the individuals themselves, and also whether the relationship between the socioeconomic backgrounds of individuals and the education outcomes varies depending on whether the individuals live in low- or high-inequality countries.

What emerges very clearly from the data (and these are micro data of a representative sample of 24 countries) is that there is always a significant difference or gap in the educational achievement of individuals, depending on their socioeconomic background. So individuals coming from low socioeconomic backgrounds tend to have worse outcomes whether we measure them in terms of qualifications, or even more importantly in terms of actual competencies, actual skills, if you like. The interesting result is that the gap tends to increase dramatically when we move from a low-inequality to a high-inequality country. The gap tends to be much, much bigger. The difference in the gap between those with intermediate or median socioeconomic backgrounds and those with high socioeconomic backgrounds is fairly stable across countries with different levels of income inequality. But when we look at the highly unequal countries there is a drop, particularly among those coming from low socioeconomic backgrounds.

One way to interpret this is that if you come from a low socioeconomic background, your chances of achieving a good level of education are lower. But if you are in a highly unequal country, the gap tends to be much, much larger compared to those coming from an intermediate or high socioeconomic background. And the reason is that in highly unequal countries it’s much more difficult for those in the bottom 10 percent, and indeed the bottom 40 percent, to invest enough in high-quality education and skills.

HB: That’s very consistent with research by [Stanford University economist] Raj Chetty and his coauthors in the United States on economic mobility, noting in U.S. parlance that the rungs of the ladder have become farther and farther apart. Would you say that these are consistent findings? One thing inequality does is it makes it harder to get to that next rung for folks at the bottom or even the mid-bottom of the ladder.

SS: Precisely, that’s exactly the point. The comparisons of the gap in educational achievements between individuals from different socioeconomic backgrounds across the inequality spectrum suggest that the gap widens when you move from qualifications to actual competencies. So, basically, it’s not just a question of reaching a certain level of education, but actually the quality of the educational outcomes you get. In highly unequal countries, people have difficulty not only getting to a tertiary level of education, but also accessing the quality of the education they need, which shows very clearly in what they can do in terms of literacy, numeracy and problem solving. So, yes, high inequality prevents the bottom 40 percent from investing enough in human capital, not only in terms of the number of years of education, but also in terms of acquiring the foundation skills because of the adverse selection into lower quality education institutions and training.

HB: I want to step back just for a moment. To summarize, it sounds like the research that you all have done finds that these measures of inequality are leaving behind people at the bottom and that affects growth. Is there anything in your findings that talked about those at the very top, the pulling apart of that top 1 percent or the very, very top 0.1 percent? What did your research show for those at the very top?

SS: It’s well known that the top one percent, actually the top 0.1 percent, are really having a much, much stronger rate of income growth than everybody else. This takes place in a wide range of countries, not to the same extent, but certainly it takes place.

The other descriptive finding we have is that for the first time in a fairly large range of OECD countries, we have comparable data on the distribution of wealth, not just the distribution of income. And what that shows is that there is much wider dispersion of the distribution of wealth, which actually feeds back into our discussion about investment in education because what matters is not just the level of income, but actually the wealth of different individuals and households.

Just to give you a few statistics: in the United States the top 10 percent has about 30 percent of all disposable income, but they’ve got 76 percent of the wealth. In the OECD, on average, you’re talking about going from 25 percent of income to 50 percent of wealth. So, basically, the distribution of wealth adds up to create a deep divide between those at the top and those at the bottom. The interesting thing is that there are differences in the cross-country distribution of income and wealth. Countries that are more unequal in terms of income are not necessarily more unequal in terms of distribution of wealth. The United States is certainly a country that combines both, but there are a number of European countries, such as Germany or the Netherlands, that tend to be fairly egalitarian in terms of distribution of income, but much less so when you look at distribution of wealth.

I think that going forward, research has to take the wealth dimension into account because a number of decisions made by individuals rely, of course, on the flows of income, but also on the underlying stock of wealth that is available to them.

Going back to your question: the fact that the top 10 percent is growing much more rapidly does not necessarily have a negative impact on economic growth. What really matters is the bottom 40 percent, which as I said before justifies our focus on opportunities and therefore on education. And the work we are doing now at the OECD extends our analysis to look at access to quality health services. My presumption is that individuals in the bottom 40 percent, especially in some countries, might not be as able as others to access quality health services. And therefore, this also affects the link between health, productivity, labor market performance, and so on. This might also be an effect that becomes a drag on economic growth altogether.

HB: One finding in the OECD report that really struck me, as an economist who focuses on policy issues, is that your analysis doesn’t conclude the policy solutions are really in the tax-and-transfer system. As you’ve already said, it’s really thinking about education, perhaps educational quality, health quality, things that might be a little bit harder to measure in terms of the effectiveness of government in some ways. After all, it’s easier to know exactly how much of a tax credit you’re giving someone versus the quality of a school or health outcome. You can certainly measure these outcomes, but it’s more challenging. It seems to me that a lot of the conclusions of this research are that it’s not enough to focus on taxes and transfers, but rather that we have to focus on these things that are harder, in the areas of education and health outcomes, focusing on people and people’s development. Would that be a fair assessment of the emphasis that this research is leading you to?

SS: To some extent, because I think both are important. Let me try to explain why. The first point is that more focus should indeed go into providing access to opportunities. And these are not just the standard indicators of how much countries spend on public and private education and health, but actually how people in different parts of the distribution have access to these services, and the quality of the services they receive. So I think the focus should be on promoting more equal access to opportunities, which means going beyond looking at access and quality of the services that individuals receive, especially those from the bottom 10 percent to the bottom 40 percent of the income distribution. This is much more difficult to measure, but I think we have to consider fundamental investment in human capital. And a lot of the policies should move toward providing more equal access to opportunities, at least in the key areas of education, health, and other key public services.

Now, the other point is that if you don’t address the inequality of outcomes then you can’t possibly address the inequality of opportunities. In countries in which income is so unequally distributed, it’s difficult to think that individuals, despite public programs, can actually invest enough on their own to have access to the right opportunities. So, the two things are very much linked. By addressing some of the inequality in outcomes, you’re also able to promote better access to opportunity for individuals. So, I think it’s really working on both sides.

We are not the only ones who have been arguing for years that the redistribution effort of countries has diminished over time. It has diminished over the past three decades because of the decline in the progressivity of income taxes and because the transfer system in general has declined in terms of the overall level, even though some programs have become more targeted. But we’re not necessarily saying spend more in terms of redistribution, but spend well in terms of benefit programs. So I think the discussion becomes: Focus more on opportunities, address income inequality because this is a major factor in the lack of access to opportunities, but be very specific in what you do in terms of targeted transfer programs. In particular, focus on programs that have been evaluated and showed that they actually lead to good outcomes.

HB: I’d also like to talk about gender- and family-friendly policies. I noticed in the report that you talked a lot about the importance of reducing gender inequality on overall family inequality outcomes but also on the opportunity piece. Could you talk a little bit about the importance of these in terms of the research that you found?

SS: One of the most interesting results of our analysis in terms of the counteracting factors of the trend increase in income inequality across the wide range of OECD countries—including a number of the key emerging economies—has been the reduction in the gender gaps. The greater participation of women in the labor market has certainly helped to partially, but not totally, alleviate the trend increase in market income inequality. But as we know, there are gender gaps not only in labor market participation, but increasingly also in the quality of jobs that women have access to compared to men. Depending on the country, of course, there are large differences.

In that context, I’m very glad we have been supporting the Group of 20 Australian presidency in 2014, which brought a gender target into the forum of the G-20 leaders. Now, they have committed to reducing the gender gap in labor force participation by 25 percent by 2025. It’s only one step into the process, but I think it’s an important signal. We at the OECD also have the “Gender Recommendation,” a specific set of policy principles that all OECD countries have engaged in, with a fairly ambitious agenda on gender equality, focusing on education, labor markets, and also entrepreneurship.

Ensuring greater participation of women in the labor market remains a very important objective, but looking more into the quality of jobs that women have access to, and making sure that policy can facilitate women entering the labor market and also having a career is very important. And also allowing women to reconcile work responsibility with family responsibility. In that context, what we are doing perhaps more than in the past is looking not only at what women should be doing or have to do, but also at what men can do and should do. At the OECD, for example, we are looking specifically at paternity leave, because many of the decisions at the household level are taken jointly by the father and the mother. And I think while we have made some progress in changing the behavior of mothers, much remains to be done to also change the behavior of fathers.

We are looking at some of the interesting policies that a number of countries are implementing to promote paternity leave, for example by reserving part of the parental leave for the father. Either the father takes the leave or it is not given to the household. And there are a number of interesting results. Some countries, such as Germany, have reserved part of the parental leave for the father and have been able to increase the number of fathers who take parental leave by a significant proportion. Again, the focus should certainly be on all the different dimensions of inequality, including gender inequality, in the labor market, but also look at the interactions of individual behaviors of men and women and those of the companies in which they work. We are doing interesting research in the case of two countries that have very generous paternity leave, Japan and South Korea: 52 weeks. Yet, only 3 percent of the fathers take paternity leave. For male workers in these countries, absence from work because of family reasons is perceived to be a lack of commitment to the firm and employer and thus may have a negative impact on their careers; not surprisingly, only a few men actually take paternity leave.

HB: One thing I know from my own research is that the United States, of course, is different in that we tie in the states that have family leave, such as California, New Jersey, and Rhode Island, with New York soon implementing it, to our federal unpaid family and medical leave, which is 12 weeks of unpaid leave and which covers about 60 percent of the labor force. At both the state and federal level, the leave is tied to the worker. So, we already have a “use it or lose it” policy in place. So for children who are born to parents in California, both the mother and the father have an equal amount of leave, but if dad doesn’t take it then the family doesn’t get it.

We’ve seen from research that once the leave is paid, men are increasingly taking it up. If the OECD hasn’t been looking at this in a cross-national comparative way, it might be an interesting case study or research project that I’ll just put out there in terms of how the United States has done. That’s the only thing that we’ve done right on paid leave vis-a-vis other OECD countries.

My last question here. Going back to the beginning of our conversation, the OECD research found that the rise of inequality between 1985 and 2005 in 19 countries knocked 4.7 percentage points off of cumulative economic growth between 1990 and 2010. That’s a big deal. The policy issues that we’ve been talking about are wide-ranging and possibly expensive, but also very important, it seems, for economic growth.

From where you sit in Paris at the OECD, are you seeing policymakers both at the OECD but also in member countries that you work with, really taking this research seriously? And are there any big questions that you think an organization like the Washington Center for Equitable Growth should be asking in our research to help show people what the evidence says? Are there sort of big questions that policymakers say, “Oh, I’ve seen this report, Stefano, but I don’t believe this piece of it.” Are there any avenues that you think we need to pursue where people are perhaps a little bit more skeptical if they’re not taking it as seriously as this research implies?

SS: These are all extremely relevant and very good questions, Heather. Let me start with the first point you raised: over a 30-year period, and across a wide range of OECD countries, high and increasing inequality has knocked down economic growth. Obviously this is not the last word and further research is needed. As we discussed, the identification of the effect and the links are not straightforward. We have used what we think are the best state-of-the-art economic techniques, but of course it’s not the last word. It would certainly help to work along these lines with a number of researchers to gather more evidence.

But it also is important for researchers to look at the channels through which increasing high levels of inequality might be detrimental for growth: namely by under-investment in broadly-defined human capital of the bottom 40 percent and therefore long-term economic growth. I think this is certainly an area in which there is a lot of scope for doing more work. In particular, there’s a lot of space for doing serious micro-based analysis looking at the extent to which individuals with low socioeconomic backgrounds actually have difficulties investing in quality education, quality human capital, and other areas.

Now, in terms of the work we have done, and the work that was done also by the International Monetary Fund and others, I think we are contributing somewhat to changing the narrative about inequality. For decades, there was a field of research on the economics of inequality, but this was largely confined to addressing inequality for social cohesion, for social reasons. We hope that now we have brought the issue of growing inequality toward the center of policymaking because inequality might be detrimental to economic growth, per se. So, even if you just want to pursue economic growth, you might want to be careful about increasing income inequality in your country.

We are not there yet, but I think there are a number of signals that indicate growing dissatisfaction in many countries, driven at least in part by the fact that people don’t see the benefits [of economic growth] even amid a recovery. One of the clearer signals is that now the issue of inequality is discussed more openly in our meetings with colleagues from the finance and economy ministries. In fact, the broad economic framework we’re now using at the OECD is inclusive growth. I would say that these are not things that would have been possible a decade ago. There is now a fairly consistent narrative around the need to pursue inclusive growth. And there is a strong focus on employment and social protection, and on investments in human capital.

I think the research community really needs to feed this discourse. There might still be some temptation, as soon as economic growth resumes, to revert to a traditional economic growth focus. And because there are a lot of fears and concerns about secular stagnation, the thinking could focus on growth because we need growth back, and we will deal with the distribution of the benefits of growth at a later stage. But we should really have a comprehensive strategy that fosters a process of economic growth that is sustainable and deals with some of the underlying drivers of inequality.

HB: Thank you. And I will note our very first interview for this series “Equitable Growth in Conversation” was with Larry Summers, who actually talked about the importance of attending to inequality if we want to address secular stagnation.

SS: Exactly.

HB: This has been so enormously helpful and so interesting. We here at Equitable Growth really appreciate the work that you all are doing at the OECD and find it incredibly illuminating. Thank you so much for taking the time to talk to us today.

SS: Thank you, Heather. Thank you very much. It’s been a pleasure.

Environmental regulation and technological development in the U.S. auto industry

Introduction

In 2013, the U.S. Chamber of Commerce commissioned a study aimed at undermining the U.S. Environmental Protection Agency’s claims that it and other federal agencies’ regulatory efforts created thousands of jobs. Naturally, the study challenged EPA’s findings, and it did so by questioning not only the agency’s findings but also its methods—particularly EPA’s economic models—arguing that there was little evidence that the agency even tried to analyze the effects of its regulations on employment.1 This disagreement is hardly novel. Anti-regulatory efforts often highlight the job-stifling effects of regulation while pro-regulatory positions often argue in favor of job growth and overall economic growth as a benefit of regulation. The debates often hinge on profoundly different economic models of employment—and employment patterns are notoriously complicated to model in the first place.

Rather than add to this noise about economic modeling, in this paper I will discuss the role of regulation in technological development, looking specifically at the automobile industry. New technologies are clearly related to economic growth, but modeling that relationship is complicated and actually requires consideration of “counterfactuals,” or assessing whether certain technologies would have emerged without regulation. Considering the question of how new technologies developed in response to regulation and then how those technologies affect job growth is even more complicated and beyond the scope of this paper. That’s why this paper focuses on the question of how regulation, technological development, and economic growth are linked by examining a multi-part, historical case study of the automobile industry and environmental regulations.

This paper considers the role of initial efforts in California to address the problem of smog and the ways in which that relatively small-scale regulation subsequently modeled and then fed a national-scale regulatory effort leading to the creation of the U.S. Environmental Protection Agency and the Clean Air Act and its amendments around 1970. This story, however, only begins with the Clean Air Act and the EPA because things got more interesting as emissions-control technologies took on a life of their own in the ensuing decades. Engineers started with a variety of ways to meet the new environmental regulations of the 1970s, but by the 1980s, controlling the emissions of the passenger car became an interesting engineering problem unto itself and led to an increasingly important role for electronics in the automobile. This period is also explored in this report.

The paper finds that regulation played an important catalytic role—it forced automobile companies to start to address emissions and air pollution, a move unlikely without regulation—but also that the ensuing thread of technological innovation gained a life of its own as engineers figured out how to optimize the combustion of the automobile in the 1980s and ’90s, specifically using computer technology. In short, what began as a begrudging effort to reduce harmful emissions evolved into a successful reshaping of the automobile into a computer with an engine and wheels.

Technology-forcing regulation, emerging technologies, and the entrepreneurial state

In this section, I will lay out three ideas that will guide the forthcoming case study. The first is that regulation aimed at reducing air pollution in the 1970s was designed to force the auto industry to develop new devices to control vehicle emissions and exhaust—it was not a specification of what these devices might be or how they should operate. This approach of forcing firms to develop new technologies that will meet certain desired outcomes is a common feature of concerns about emerging technologies in the 21st century.

Second, an examination of the analyses aimed at unpacking the challenges faced by engineers who are obliged to develop emerging technologies is useful when considering the aims and effectiveness of technology-forcing regulation, as I do in this paper. Lastly, I will bring into the analysis the “entrepreneurial state” analytical framework of Mariana Mazzucato, a noted professor of the economics of innovation at the Science Policy Research Unit of the University of Sussex, as a way to consider the role of the state in encouraging technological development. Mazzucato writes about the various roles of the entrepreneurial state in building institutions to create long-term economic growth strategies, “de-risk” the private sector, and “welcome” or cushion short-term market failures.2 Rather than a laissez-faire state, an active, entrepreneurial state eases the route to economically important innovations produced by the private sector.

Technology-forcing regulation

Economists and others refer to legislation with a goal of forcing industry to develop new solutions to technological problems as “technology-forcing” regulation.3 The Clean Air Act of 1970, which targeted both stationary and mobile/non-point air pollution sources, is probably the most famous piece of technology-forcing legislation in U.S. history. The act specified that various industries meet standards for emissions for which no technologies existed when the act was passed. The Clean Air Act set standards that firms had to meet; importantly, the technology to be used to meet the standards was not specified, allowing firms to either develop or license a new device to add to the technological system in order to meet the stated criteria. Under this sort of technology-forcing regulation, both the electrical power utilities and the automobile industry developed a wide array of new exhaust-capturing and emission-modifying devices.

The legislative strategy of writing regulations that presented standards for which the current in-use technologies were insufficient was upheld by the U.S. Supreme Court in 1976 in the case of Union Electric Company v. Environmental Protection Agency. In this case, Union Electric challenged the Clean Air Act’s provision that a state could shut down a stationary source of pollution in order to force it to comply with a standard for which a feasible, commercial mediation technology did not yet exist.4 The U.S. Supreme Court upheld the legislation; states could set unfeasible emission limits where it was necessary to achieve National Ambient Air Quality Standards, which set limits on certain pollutants in ambient, outdoor air.5 Not upholding the statute would have opened the door to firms moving to locations with better air quality in order to emit more pollution and still keep the measured pollutants under the limits. This was where performance-based standards met technology-forcing regulations.

Emerging technologies

When the Clean Air Act was amended in 1977, Part C of the statute was dedicated to elaborating the regulation that said new sources in locations must meet or exceed National Ambient Air Quality Standards by using the “best available” technology. Part D stipulated that new plants in locations that did not meet these standards had to comply with the lowest achievable rate. There would be no havens for polluters under the Clean Air Act. These regulations spurred the development of commercially viable “scrubbers,” which remove sulfur from flue gases with efficiencies in the 90 percent range and were developed around 1980. The effort to use regulation to force power plants to develop technology to reduce smokestack pollution worked. There were all sorts of economic effects that require complicated cost-benefit analysis over the subsequent decade or so, but the regulation did what it intended in terms of forcing technological development to meet the needs of a cleaner environment.

In-use and emerging technologies

Stepping back from the specifics of clean air regulations for a moment, let’s examine the ways in which already-in-use technologies can be modified by regulations. It may be helpful to compare and contrast in-use technologies or mature technologies with new or emerging technologies. The contrast between the two isn’t as strong as the vocabulary might suggest. Emerging technologies are simultaneously full of promise and danger, while mature technologies seem to be more predictable. The promises of a mature technology are manifest and the dangers seemingly under control. The term “emerging technology” is only used as an indicator that these technologies have the potential to go either way—toward a utopian or dystopian future. Emerging technologies are often considered a particularly challenging regulatory problem. The “Collingridge dilemma” in the field of technology assessment—named after the late professor David Collingridge of the University of Aston’s Technology Policy Unit—posits that it is difficult, perhaps impossible, to anticipate the impacts of a new technology until it is widely in use, but once it is in wide use, it is then difficult or perhaps impossible to control (regulate) the pace of change of the technology precisely because it is in wide use.6 When change is easy, the need for it cannot be foreseen; when the need for change is apparent, change has become expensive, difficult, and time-consuming. Nowadays, it is common in economic and political rhetoric to hear of the speed of technological development as a further obstacle to effective regulation.

Air quality regulation, in particular, was reactive rather than prophylactic in the case of air pollution coming from both the internal combustion engine and stationary sources such as electrical power plants. But it was also a long process that was highly responsive to both changing understandings of the chemistry of air pollution and to the development of a long list of diverse, new, pollution-reduction technologies. In the 1970s, when many of these technologies were developed, demonstrated, and ultimately introduced into the marketplace, the political rhetoric about regulation was less contentious than it is today. Regulation was seen as a legitimate role for the state, and even companies that would be affected by regulations demanded that regulations be settled on. Uncertainty about regulation could be seen as a greater threat than the regulations themselves.

Seeing in-use technologies as having common features with emerging technologies opens up a useful space to consider technological change. In what sense is today’s automobile the same technology as the carbureted, drum-brake-equipped, body-on-frame vehicle of the 1960s, let alone the hand-cranked Model T of 1908? There are many continuities, of course, in the evolution of the Model T to the present-day computer-controlled, fuel-injected, safety-system-equipped, unibody-constructed car. But looking at today versus 1908, one might argue that there’s actually little shared technology in the two devices. I would suggest that the category of mature technology isn’t an accurate one if “mature” implies any sense of stasis. As these technologies developed, there was definitely a sense of path dependency—that modifications to the technology were constrained by the in-use device.

This dynamic was especially true in systems that the driver interacted with, such as the braking system. Engineers working on antilock braking systems in the 1960s took it as a rule that they could not change the brakes in any way that would ask drivers to change their habits.8 But in-use technologies were much more dynamic than the term “mature” implies, and certain sub-systems—among them microprocessors, sensors, and software—were, in fact, classic enabling emerging technologies.

So what happens if a mature technology like the automobile is treated like an emerging technology for regulatory or governance purposes? Perhaps the Collingridge dilemma should be taken seriously for any regulatory process and shouldn’t be focused on the uniqueness of regulating emerging technologies? The impacts of regulation can be as hard to anticipate for technologies that “emerged” as they are for technologies that are new to the market. The case of the automobile demonstrates this dilemma, since no one at the time anticipated the computerization of the car as an outcome of emissions and safety regulations in the 1970s.

Looking at game-changing regulation of in-use technologies does show, though, that Collingridge overstated the (inherent) difficulty of changing technologies once they’re on the market. The automobile’s changes since the 1970s are significantly driven by the regulation of technologies emerging in the 1960s and 1970s; in hindsight, the technology was changeable in far more ways than the historical actors imagined. This is an optimistic point about technological regulation—technologies are never set in stone.9

One difference between emerging technologies and automobiles is that the economic actors and stakeholders are much clearer in the case of cars. This means that government regulators need to work in concert with known industrial players. Regulation constitutes a collective effort between firms and regulatory agencies. This doesn’t mean the process isn’t antagonistic; in fact, because the interests of the state and private firms don’t align, the process is typically very contentious. But the way forward is not to eliminate or curtail either the state or private industry’s role. The standard-setting process itself is a critical one.10 The role of government, though, should be thought of as more entrepreneurial than bureaucratic in the case of technology-forcing regulation.

The role of government regulation in technological development

Mariana Mazzucato’s concept of the “Entrepreneurial State” offers a framework for thinking about how governments in the 21st century are able to facilitate the knowledge economy by investing in risky and uncertain developments where the social returns are greater than the private returns.11 States typically have three sets of tactics for intervening in technological development:

- Direct investment in research or tax incentives to corporations for research and development

- Procurement standards and consumption

- Technology-forcing regulation

Mazzucato’s book focuses on the first, but the other two played equally important roles in the second half of the 20th century.

Because there are multiple modes of state action, the state plays a central role in fostering and forcing the invention of new devices that offer social as well as, in the long run, private returns. My focus here is obviously on the role of regulation as a stick to force firms to develop in-use technologies in very particular ways. But it is important to recognize the way the federal government wrote the legislation that forced technological development. Lee Vinsel, assistant professor of science and technology studies at the Stevens Institute of Technology, calls these technology-forcing regulations “performance standards” because they were written to specify both a criterion and a testing protocol so that firms have wide latitude to design devices that could be considered to meet the criterion through standardized tests.12 Armed with the ideas that technologies are never static and mature and that the state plays critical and diverse roles in fostering and forcing new technologies to market, let’s now turn our attention to the phenomenon of emissions regulations for automobiles since the late 1960s.

Setting standards for automobile emissions

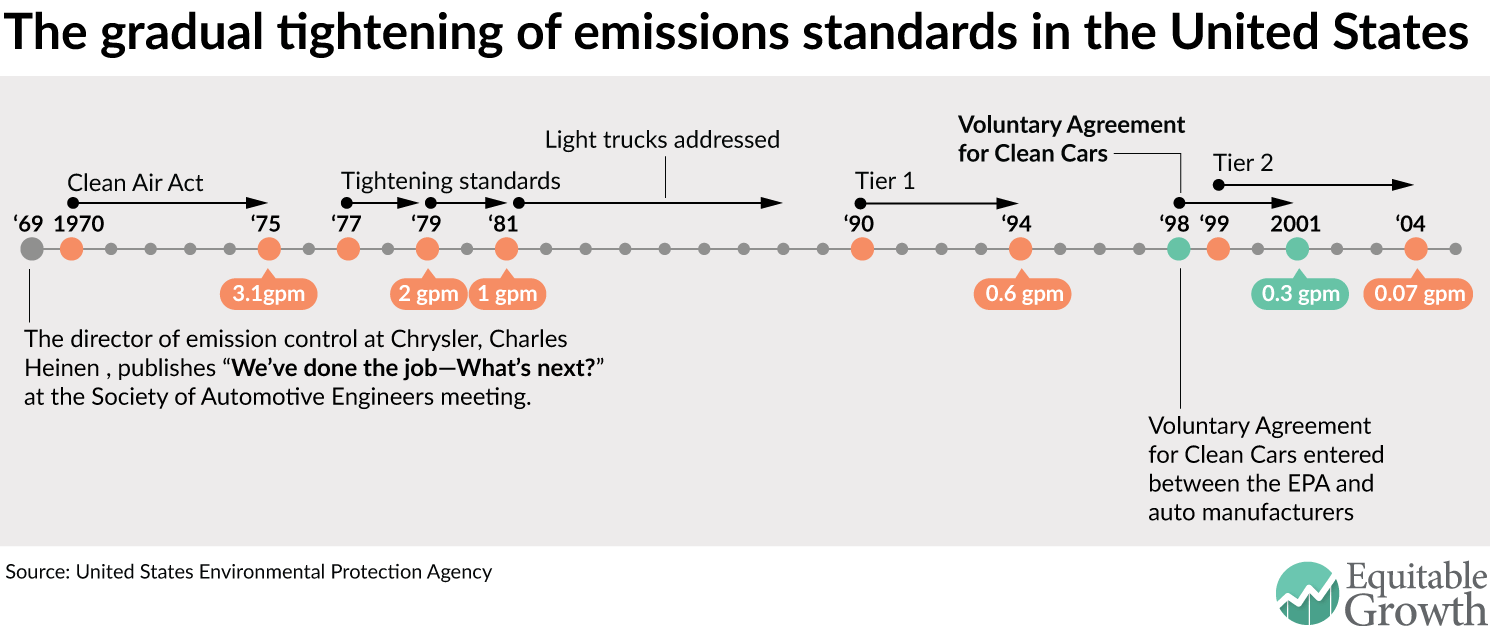

While I could provide a long timescale picture of the car’s development, my focus here is on the gradual computerization of the automobile in response to regulatory efforts to make it both safer and less polluting. Computer control of various functions of the automobile began first with electronic fuel injection and then antilock brakes in the late 1960s.13 The most important developments in electronic control occurred with the development of emissions-control technologies in the 1970s and 1980s, so that by about 1990 the automobile was being fundamentally re-engineered to be a functional computer on wheels. (See Figure 1.)

Figure 1

The implementation of these new systems was fairly gradual and followed a typical pattern of initial installation on expensive models that then trickled down to cheaper ones. Standardized parts and economies of scale are critical manufacturing techniques in the automobile industry, so there was and is often an incentive to use the same devices across different models. Therefore, when California led the creation of auto emissions regulations, the state was a large enough market that automobile manufacturers could almost treat the state standards as national ones. There was no way the auto industry would benefit from different emissions standards set state by state. Large markets such as California created an incentive for the automobile industry to want national standards instead of state-by-state regulation, even if some states might create much lower and easily achieved goals.14

The types and forms of clean air regulations were important to making them effectively force technological development. Regulations had to be predictable because dynamic standards would create higher levels of uncertainty, which makes firms nervous and unhappy. Technical personnel often advocated standards that could increase as new technologies were developed and implemented—engineers, in particular, often exhibited an epistemic preference for the “best” (the highest achievable) standards. This stance was particularly common for air pollution as there was no clear public health threshold about how much of nitrogen oxides or sulfur dioxide was dangerous or harmful. Many engineers thought the technologies should constantly improve and remove ever more chemicals known to be harmful to human lungs and ecosystems.

This debate about whether standards should either be static or instead increase with the development of ever more complicated technologies wasn’t just occurring inside government. In 1969, the director of emission control at Chrysler, Charles Heinen, presented a rather controversial paper titled “We’ve done the job—What’s Next?” at the Society of Automotive Engineers meeting that January. In the paper, Heinen lauded auto manufacturers for making exactly the needed improvements to clean up emissions and then pronounced the job done. Clearly speaking for the automotive industry, Heinen also questioned the health effects of automobile emissions, writing, “automobile exhaust is not the health problem it has been made out to be.”15 He argued that since life expectancies for Los Angeles residents were equal to or greater than national averages, the concentration of exhaust and the prevalence of smog could not be conclusively called dangerous to health. Furthermore, he argued that any further reductions in emissions would come only at a high cost—pegging the national cost to develop such technologies at $10 billion, and claiming that further reductions would likely come at the cost of decreased fuel economy for the user.

Heinen was positioning the industry against what he called catalytic afterburners to further burn off carbon monoxide, nitrogen oxides, and hydrocarbons. Yet some government regulators were specifically trying to force the development of catalytic afterburners or converters. The result was a victory for the regulators—by 1981, the three-way catalytic converter would become the favored technology to meet precisely the kind of escalating standards Heinen was worried about.

But it was a victory for quite a number of engineers, too. Phil Myers, a professor of mechanical engineering at the University of Wisconsin and president of the Society of Automotive Engineers in 1970-71, presented a paper in 1970 titled “Automobile Emissions—A Study in Environmental Benefits versus Technological Causes,” which argued that “we, as engineers concerned with technical and technological feasibilities and relative costs, have a special interest and role to play in the problem of air pollution.”16 He wrote that while analysis of the effects of various compounds wasn’t conclusive in either atmospheric chemistry or public health studies, engineers had an obligation to make evaluations and judgments.

To Professor Myers, this was the crucial professional duty of the engineer—to collect data where possible but to realize that data would never override the engineer’s responsibility to make judgments. He strengthened this position in a paper he presented the following year on “Technological Morality and the Automotive Engineer,” in a session he organized on Engineers and the Environment about bringing environmental ethics to engineers. Myers argued that engineers had a moral duty to design systems with the greatest benefits to society and not to be satisfied with systems that merely met requirements. At the end of his first paper in 1970, Myers also made his case for the role of engineers in both technological development and regulation, writing:

At this point, it would be simple for us as engineers to shrug our shoulders, argue that science is morally neutral and return to our computers. However, as stated by [the noted physician, technologist, and ethicist O. M.] Solandt, “even if science is morally neutral, it is then technology or the application of science that raises moral, social, and economic issues.” As stated in a different way by one of the top United States automotive engineers, “our industry recognizes that the wishes of the individual consumer are increasingly in conflict with the needs of society as a whole and that the design of our product is increasingly affected by this conflict.”17

Myers then cited atmospheric chemist E.J. Cassell’s proposal to control pollutants “to the greatest degree feasible employing the maximum technological capabilities.”18 For Myers, the beauty of Cassell’s proposal was its non-fixed nature—standards would change (become more stringent) as technologies were developed and their prices reduced through mass production, and when acute pollution episodes threatened human well-being, the notion of feasibility could also be ratcheted up. Uncertainty for Myers constituted a call to action whereas to Heinen it was a call to inaction. Heinen would have set fixed standards as both morally and economically superior—arguing “we’ve done the job.”

Myers also took a position with regard to the role of the engineer to spur consumer action. He wrote:

…our industry recognizes that the wishes of the individual consumer are increasingly in conflict with the needs of society as a whole and that the design of our product is increasingly affected by this conflict. This is clearly the case with pollution—an individual consumer will not voluntarily pay extra for a car with emissions control even though the needs of society as a whole may be for increasingly stringent emissions control. …there is universal agreement that at some time in the future the growth of the automobile population will exceed the effect of present and proposed controls and that if no further action is taken mass rates of addition of pollutants will rise again.19

Myers was arguing that federal emissions standards should be written such that they can become more stringent in the near future but also that regulation was a necessary piece of this system because it would serve to force the consumer’s hand (or to put it another way, to force the market). According to Myers, engineers were the ideal mediators of these processes.

In response to these debates, which occurred among government regulators, industry experts, and technical professionals, the Clean Air Act and its subsequent amendments set multiple standards.20 Fixed, performance-based standards, which changed more often than Myers would have predicted, became the norm, but only after a disagreement about whether to measure exhaust concentration or mass-per-vehicle-mile. The latter was written into the Clean Air Act and remained the standard, but not without attack from those who argued that the exhaust concentration was a better proxy for clean emissions.

The initial specified standards under the Clean Air Act covered carbon monoxide, volatile organic compounds (typically unburned hydrocarbons or gasoline fumes), and nitrogen oxides. Looking only at nitrogen oxides as an example, the standards became significantly tougher over 30 years. The initial standard for passenger cars was 3.1 grams per mile (gpm), which automobile manufacturers had to show they had achieved by 1975.21 With the 1977 amendments, the requirement would drop to 2 gpm by 1979 and to 1 gpm by 1981.

The 1990 Clean Air Act Amendment set a new standard called “Tier 1,” which specified a 40 percent reduction and moved the standard for nitrogen oxides emissions in passenger cars down to 0.6 gpm. Then, in 1998, the Voluntary Agreement for Cleaner Cars between the EPA, the automobile manufacturers, and several northeastern states again reduced the nitrogen oxides goal by another 50 percent to 0.3 gpm (just 10 percent of the original standard from 1975) by 2001. This agreement was unusual in that it was not mandated by the Clean Air Act but would nonetheless affect cars nationally.

At the same time as the Voluntary Agreement for Cleaner Cars was unveiled, the EPA issued the Tier 2 nitrogen oxides standards, which reduced the standard to 0.07 gpm by 2004. Tier 2 also specified a change in the formulation of gasoline so that these and other standards were more readily achievable. So in the 30 years between 1975 and 2005, nitrogen oxides emission standards were reduced by approximately 98 percent. What did auto manufacturers have to do to meet these seemingly draconian standards?
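The arithmetic behind these reductions can be checked directly. The short Python sketch below uses only the nitrogen oxides standards quoted in the text; the names `NOX_STANDARDS_GPM` and `percent_reduction` are illustrative and do not come from the paper.

```python
# Nitrogen oxides standards for passenger cars in grams per mile (gpm),
# exactly as quoted in the text. Labels are descriptive only.
NOX_STANDARDS_GPM = [
    ("initial standard, by 1975", 3.1),
    ("1977 amendments, by 1979", 2.0),
    ("1977 amendments, by 1981", 1.0),
    ("Tier 1, 1990 amendments", 0.6),
    ("Voluntary Agreement, by 2001", 0.3),
    ("Tier 2, by 2004", 0.07),
]


def percent_reduction(old_gpm: float, new_gpm: float) -> float:
    """Return the percentage by which new_gpm is lower than old_gpm."""
    return (old_gpm - new_gpm) / old_gpm * 100.0


if __name__ == "__main__":
    baseline = NOX_STANDARDS_GPM[0][1]
    previous = baseline
    for label, gpm in NOX_STANDARDS_GPM[1:]:
        step = percent_reduction(previous, gpm)        # versus the prior standard
        cumulative = percent_reduction(baseline, gpm)  # versus the 1975 standard
        print(f"{label}: {gpm} gpm, step {step:.1f}%, cumulative {cumulative:.1f}%")
        previous = gpm
```

Run as a script, this shows the 40 percent step to Tier 1, the 50 percent step under the Voluntary Agreement, and a cumulative reduction from 3.1 gpm to 0.07 gpm of roughly 97.7 percent, which is consistent with the "approximately 98 percent" figure.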

Meeting emissions standards: The catalytic converter

The general strategy of automobile engineers for meeting California standards in the 1960s and federal standards in the first half of the 1970s was to add devices to the car to catch, and often reburn or chemically transform, unwanted exhaust and emission gases.22 The first several add-ons were to reduce unburned hydrocarbons (gasoline fumes), which were targeted in 1965, before the Clean Air Act, by the Motor Vehicle Air Pollution Control Act. Once the focus shifted to nitrogen oxides in the 1970s, the challenge for engineers was to invent technologies that didn’t undo a decade’s worth of hydrocarbon reduction. Nitrogen oxides are formed through an endothermic process in an engine and are produced only at high combustion temperatures, greater than 1,600 degrees centigrade. Therefore, one key to reducing them was reducing the temperature of combustion, but in the 1960s, combustion temperatures had often been increased to more completely burn hydrocarbons. Reducing combustion temperatures threatened to increase unburned hydrocarbons.

The solution was to invent a device that would only engage once the engine was hot and would at that point reduce combustion temperature. The first of these devices was the exhaust gas recirculation system—a technology that required a temperature sensor in the car’s engine to tell it when to engage. This wasn’t the first automotive technology reliant on sensors, but sensors of all kinds would become increasingly important in the technologies developed to meet the ever-tightening nitrogen oxides standards. Sensors were also not as reliable as either automobile manufacturers or car owners wanted, and the exhaust gas recirculation system was the first of many technologies best known to drivers for lighting up the dashboard “check engine” indicator. The new technology was useful, but it failed to offer enough change in the chemistry of combustion to meet the falling nitrogen oxides allowances. A more complex add-on device was in the near future.

Members of the Society of Automotive Engineers first started to hear about devices to transform vehicle exhaust gases using catalysts in 1973. European and Japanese engineers were very common in these sessions, as they were convinced that catalytic emissions technologies were going to be required by their governments (in contrast to the performance-standards approach already in place in the United States). It wasn’t that U.S.-based engineers opposed catalytic devices, but they knew that the EPA was concerned with the testable emissions standards rather than the use of any particular device.

The catalytic converters of the 1970s could transform several different exhaust gases. Engineers were particularly interested in the transformation of carbon monoxide into carbon dioxide, changing unburned hydrocarbons into carbon dioxide and water, and dealing with nitrogen oxides by breaking them apart into nitrogen and oxygen. The converters presented great promise for these reactions, and converters that performed all three reactions were called three-way catalytic converters.

But catalytic converters also presented challenges to auto manufacturers. First, the catalysts in them were easily fouled; that is, other materials bonded with the catalysts and prevented them from catalyzing the reactions they were supposed to generate. The most common fouling substance was lead, which in the 1970s was added to gasoline to prevent engines from knocking. Lead had to be removed from gasoline formulations if catalytic converters were to be used. This had to be negotiated between the federal government, the oil industry, and the automobile industry, none of which was satisfied with the solution.

Second, the catalytic converters depended on a reliable exhaust gas as an input. This meant that to use the converter on different cars, it had to receive the same chemical compounds, and therefore each model of vehicle had to have the same pre-converter emissions technologies.

Lastly, the catalytic converter depended on a consistent combustion temperature, which required more temperature and oxygen sensors in the engine. Along with electronic fuel injection, which was increasingly replacing carburetion as a technique for vaporizing the car’s fuel (mixing fuel and air) as it entered the intake manifold or cylinder, exhaust gas recirculation systems and catalytic converters were the chief engine technologies driving the move to computer control in the car. All three of these technologies depended on data input from sensors, logic control, and relays that engage the system under the right conditions.
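The sensor, logic, and relay pattern described here can be illustrated with a minimal Python sketch of a gas recirculation system that engages only once the engine is hot. Everything in it is an assumption made for illustration: the class name `RecirculationController`, the two temperature thresholds, and the use of a gap between them (hysteresis) to keep the relay from switching rapidly near the threshold. The paper gives no such values and does not describe any manufacturer's actual logic.

```python
from dataclasses import dataclass

# Hypothetical thresholds in degrees centigrade, chosen only for illustration.
ENGAGE_AT_OR_ABOVE_C = 80.0   # engine considered hot: close the relay
RELEASE_AT_OR_BELOW_C = 70.0  # engine has cooled: open the relay


@dataclass
class RecirculationController:
    """Tracks whether the (hypothetical) recirculation relay is engaged."""

    engaged: bool = False

    def update(self, engine_temp_c: float) -> bool:
        """Apply one temperature sensor reading and return the relay state."""
        if not self.engaged and engine_temp_c >= ENGAGE_AT_OR_ABOVE_C:
            self.engaged = True
        elif self.engaged and engine_temp_c <= RELEASE_AT_OR_BELOW_C:
            self.engaged = False
        return self.engaged


if __name__ == "__main__":
    controller = RecirculationController()
    for reading in [20.0, 60.0, 85.0, 75.0, 65.0, 90.0]:
        state = "engaged" if controller.update(reading) else "off"
        print(f"{reading:5.1f} C -> {state}")
```

The point of the sketch is only that even a simple add-on device needs a sensor reading, a decision rule, and a switched output, which is the combination that pushed engine design toward electronic control.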

Reconceptualizing combustion

Once the catalytic converter was introduced and increasingly made standard in the late 1970s and 1980s, work to further reduce nitrogen oxides emissions depended on either continuous improvements to the catalytic converter or the addition of new technologies. But many engineers were dissatisfied with the Rube Goldberg-like contraption that the car’s emissions control was becoming, especially those working on more expensive models, who had more opportunity to design new systems rather than resort to the increasingly common “off the shelf” emission-reduction technologies. There had to be a better design that would make the targets achievable. The promise lay in managing the whole combustion process—not once in a while to turn on an exhaust gas recirculation system, but continuously to optimize combustion with respect to undesirable emission compounds. Developing the technologies to manage combustion electronically took another decade.

Combustion management started to appear with the gasoline direct injection approach, which began development in earnest in the 1970s and started to appear on the market in the 1990s.23 Gasoline direct injection required the car to have a central computer or processing unit to read input data from a series of sensors and produce electric signals to make combustion as clean as possible, especially focused on the production of nitrogen oxides. Gasoline direct injection changed the ratio of fuel to air continuously. A warm engine under no load could burn a very lean mixture of fuel, while a cold engine or an engine under heavy load needed a different mixture to keep nitrogen oxides at the lowest possible level.
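Continuous mixture management of this kind can be sketched as a function from sensor readings to a target air-fuel ratio. The Python example below is a simplified illustration under stated assumptions, not a description of any production engine controller: the function name `target_air_fuel_ratio`, the warm-engine threshold, the leanest ratio, and the linear interpolation over load are all hypothetical. The only outside figure used is the approximate stoichiometric air-fuel ratio for gasoline of 14.7 to 1 by mass, and the sketch assumes that the "different mixture" for a cold or heavily loaded engine is one close to that ratio.

```python
STOICHIOMETRIC_AFR = 14.7  # approximate air-fuel mass ratio for gasoline

# Hypothetical calibration values, for illustration only.
LEANEST_AFR = 20.0     # leanest mixture allowed for a warm engine at no load
WARM_ENGINE_C = 80.0   # temperature above which the engine counts as warm


def target_air_fuel_ratio(engine_temp_c: float, load: float) -> float:
    """Pick a target air-fuel ratio from two sensor inputs.

    load is the fraction of maximum engine load, from 0.0 to 1.0.
    """
    if not 0.0 <= load <= 1.0:
        raise ValueError("load must be between 0.0 and 1.0")
    if engine_temp_c < WARM_ENGINE_C:
        # Cold engine: assume a mixture near stoichiometric.
        return STOICHIOMETRIC_AFR
    # Warm engine: lean at no load, moving toward stoichiometric as load rises.
    return LEANEST_AFR - (LEANEST_AFR - STOICHIOMETRIC_AFR) * load


if __name__ == "__main__":
    samples = [(20.0, 0.1), (90.0, 0.0), (90.0, 0.5), (90.0, 1.0)]
    for temp_c, load in samples:
        afr = target_air_fuel_ratio(temp_c, load)
        print(f"temp={temp_c:5.1f} C load={load:.1f} -> target AFR {afr:.2f}")
```

A real controller would recompute such a target many times per second from many more inputs, which is why the approach required a central processing unit in the car.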

Yet early introductions of this new technology, such as Ford’s PROCO system on the late 1970s Crown Victoria, failed to meet increasingly stringent standards, and the system was canceled. It took well over a decade to mature the gasoline direct injection technology into a system that could help achieve the Tier 1 and Tier 2 nitrogen oxides standards.

Other technologies supported the idea that combustion could be managed to optimize output under widely different conditions. Variable valve timing and lift systems, which are often referred to generically as VTEC systems even though that’s the trademarked name of Honda’s system, control the timing and extent of the opening of an engine’s valves. These mechanically and electronically complex systems can improve performance and reduce emissions, and they require data analysis by a computer processing unit. They were introduced in the late 1990s.

By the 1990s, clean combustion was the goal of automotive engine designers. The car should produce as few exhaust gases as possible, beyond carbon dioxide, water, and essentially air, composed primarily of oxygen and nitrogen. The carbon dioxide output is really the issue today, as it is now the primary pollutant produced by the automobile. It’s not that earlier engineers characterized it as inert but rather that combustion will inevitably generate carbon dioxide in quantities far too large to either capture or catalyze. There is no catalytic path to eliminating carbon dioxide after the combustion cycle, and combustion necessarily produces carbon dioxide. And capturing it presents insurmountable problems due to volume and the obvious question of storage. Already in 1970, Phil Myers did, perhaps surprisingly, raise the question of carbon dioxide: “increasing concern about [CO2’s] potential for modifying the energy balance of the earth, that is the greenhouse effect.” And Myers called CO2 the automobile’s “most widely distributed and abundant pollutant.”24 The internal combustion engine is more than a carbon-dioxide-producing machine, but it is nothing less than that either.

The recent scandal over Volkswagen’s computerized defeat device falls directly into this concern over computer control of combustion and its output of nitrogen oxides. The VW engines currently under scrutiny are diesel-powered rather than gasoline engines, so they are subject to different Clean Air Act regulations and involve some systems and technologies that differ from those described in this paper. Still, it is not surprising that nitrogen oxides are the pollutants that the VW engines produce in excess of regulatory levels.

The technologies designed since the 1970s to produce such a striking reduction in nitrogen oxides all presented trade-offs with vehicles’ performance and gas mileage. Gas mileage is also the subject of federal regulation, but the corporate average fuel economy standards, or CAFE standards, only specify an average for an entire class of vehicles. Volkswagen’s choice to implement software that would override the nitrogen-oxides-controlling systems except when a vehicle was under the precise conditions of testing was a choice to privilege what they perceived as customers’ preferences over meeting U.S. requirements for pollution emission. There’s much to say about VW, but little of it involves the subject of this paper—the role of regulation in generating socially desirable innovations.

Conclusion: Regulation, new technologies, and economic growth

The purpose of the paper was to analyze the historical interactions between regulation and technological development. In the case of automotive innovations, it is clear that high emissions standards did force the development of new technologies by jumpstarting a quest to improve the car, to make it less environmentally taxing and harmful to human health. More importantly, the continually escalating emissions standards, exemplified here by increasingly stringent nitrogen oxides standards, led to fundamental changes in the car that made it not only less polluting but also more reliable as a (largely unanticipated) byproduct of computerization.

Cars also have become much safer through regulation, with the traffic death rate dropping from approximately 20.6 deaths per 100,000 people in 1975 to about 10.3 per 100,000 in 2013, a drop of 50 percent.25 Few of the technologies used to improve the car existed prior to the enactment of legislation.26 Technology-forcing regulations can be effective, and the opposition of industries affected by them is usually temporary, lasting only until new technologies are available. Given the complexity of many of today’s technological challenges, especially those in the energy sector and involving climate change, the U.S. government needs to consider its role as a driver of technological change.

The link between regulation and technological innovation in automobiles has had a variety of complicated effects on economic growth and the labor market—from creating new jobs to produce new automotive electronic technologies to changing the economic environment of the repair garage. This process of continuous innovation in response to regulation has continued since the 1990s, and now we face the challenges and opportunities of self-driving cars or autonomous vehicles. The adoption of these new technologies for a new kind of car over the next decades will require major changes in legislation, in driver behavior and attitudes, and of course in technologies themselves, including large technological systems. All of these will have important economic effects, some of which are fairly predictable, others of which are not, but all of which will certainly be politicized by interested parties.

About the author

Ann Johnson is an associate professor of science and technology studies at Cornell University. Her work explores how engineers design new technologies, particularly in the fields of automobiles and nanotechnology, and how technology serves as a tool of nation-state building.

Acknowledgements

Special thanks to W. Patrick McCray, David C. Brock, Lee Jared Vinsel, James Rodger Fleming, Adelheid Voskuhl, and Cyrus Mody for conversations and comments, and the other members of the History of Technology workshop at the Washington Center for Equitable Growth, especially Jonathan Moreno and Ed Paisley.

Emerging technologies, education, and the income gap

Overview

Technologies and industries do not emerge in isolation—as science and technology professors Braden R. Allenby and Daniel R. Sarewitz observe in their book “The Techno-human Condition”—but instead are coupled with complex social and natural systems. “Coupled” means that a change in one situation, such as the emergence of a new technology, will cause changes in both the social and natural systems.27 “Complex” means that these changes are hard to predict because of the nature of complex systems. Ever-greater complexity defines today’s emerging technologies, and as they emerge, they could end up supporting a social system that emphasizes merit or instead one that makes it more difficult for low- and middle-income people to achieve success.

The question is how to create social systems that maximize the opportunity for those not at the very top of the economic spectrum to succeed. Income disparities interfere with economic growth, argues Nobel Prize-winning economist Joseph Stiglitz, in part because talented, motivated people who could add value to society are not given a fair shake at showing what they could do.28 The “History of Technology” series published by the Washington Center for Equitable Growth over the past few months delves into where the introduction of new technologies in the recent past resulted in more equitable economic growth and sustained job creation, opening avenues for many workers to contribute meaningfully to economic growth—even though the disruptions to social systems caused by these technologies were often jarring and widespread.

In my closing essay for this series, I will hazard some thoughts about how newly emerging technologies today may play out in our social and natural systems, examining in particular how new educational systems could help ensure the wide and widening income gap in the United States is reversed rather than exacerbated by the complexity of new technologies. I take some comfort from the other papers in this series and from my own view of the emerging use of telephones nearly a century and a half ago that the net result of today’s emerging technologies will be positive. Yet I also see other, less benign paths that our society and nation could take with today’s technologies.

The case of the telephone

Most inventors in the early 1870s thought that the “reverse salient” in communications technology was bandwidth, meaning that only two messages could be sent down the same telegraph wire at the same time.29 Alexander Graham Bell thought the transmission of speech would be transformative, and after a long series of relatively simple experiments, he obtained a patent for any device that would produce what he called an undulatory (sinusoidal) current.30 Speech was of no interest to Elisha Gray—Bell’s rival—and Gray’s backers at Western Union, nor to Thomas Edison, who developed a superior telephone transmitter but was blocked by a patent obtained by the new Bell Corporation. Western Union then turned down the opportunity to buy the fledgling Bell Corporation, and the latter’s telephone gained market share over the former’s telegraph system, especially after the invention of the switchboard.

Were telegraph operators deprived of jobs because of the switch to a new technology? Telegraph operating was a prized skill in the early 1870s, much like modern-day web design. Telegraph operators like Edison in his youth could show up in almost any city and get a job. The need for such operators declined over the period of the telephone’s growth, but telegraphy had always been a technology needed by many for occasional use—except for businesses and government—whereas the telephone was a technology needed by many and used far more often because one could do it from one’s home, not a telegraph office.

One of the best outcomes of telephony was a growth in employment for women, who had trouble breaking into the business of operating telegraphs but were far more successful at taking over the operation of telephone switchboards. Women also became heavy users of the telephone.31 So the telegraph-to-telephone transition was not disruptive for employment even though it did lead to the rise of the Bell Telephone Company and the decline of Western Union and slowly changed the way people communicated with each other.32

Telephones were originally owned mostly by the wealthy, as illustrated in 1936 when the Literary Digest infamously predicted that Republican presidential candidate Alf Landon would defeat Franklin Delano Roosevelt in a virtual landslide, based on a poll of telephone users. That year, 60 percent of the highest wage earners in the United States had telephones but only 20 percent of the lowest earners, and those who were wealthy were more inclined toward Landon. By 1941, 100 percent of families making $10,000 or more had phones, as opposed to only 12 percent of families making less than $500. By 1970, only the poorest American households did not have phones.33 As a result, telephone polling became much more accurate.

Emerging technologies as of 2015

Carl Benedikt Frey and Michael A. Osborne, both of the University of Oxford, argue that computers have hollowed out middle-income jobs, with lower-income workers moving into services and the higher incomes going to people who work with computers and who work with people in functions such as management.34 This pattern will change as computers take over lower-end service jobs. Websites are already replacing the function of travel agents, robots can automate manufacturing and potentially much of the fast food industry, and self-driving vehicle technology could eliminate the need for truck drivers. The roles of cashiers and telemarketers might be replaced by software that could handle a wide range of verbal queries. Even infrastructure jobs are at risk as robot manufacturing plants could build bridges designed to be dropped into place, and perhaps automated trucks could drive them there, potentially reducing the number of humans involved. According to Frey and Osborne, 47 percent of existing jobs may disappear as a result.

These changes will exacerbate the rich/poor divide because high-skill and high-wage employment is one of the classes of jobs that cannot be automated. These jobs depend on social intelligence such as the ability of chief executives to confer with board members, coordinate people, negotiate agreements, and generally run the affairs of companies.35 Before the stock market collapse in 2008, CEOs in the United States were paid on average 240 times the salary of workers in their industries—on the grounds that it took this kind of pay to attract and retain this kind of talented leadership.36 Is the social intelligence of a CEO worth this kind of premium?

CEOs benefit from what the 20th century American sociologist Robert K. Merton labeled the Matthew effect: “the rich get richer at a rate that makes the poor become relatively poorer.”37 Merton was thinking primarily about scientists; those awarded Nobel prizes get much more credit for their contributions than relatively unknown scientists who make similar contributions. As one of the laureates Merton interviewed said, “The world … tends to give the credit to [already] famous people.”38 “The world” in this case refers to the social world in which the scientists do their work, including their peers, the funding agencies, the press, policymakers, and politicians. A similar phenomenon may exist in the case of the CEO who went to the right business school, made the right connections, burnished her or his reputation at every opportunity, and finally arrived at the top.

Is what a CEO knows really 200 times more valuable than what the average worker knows? For the late Steve Jobs of Apple Inc., Bill Gates of Microsoft Corp., and the legendary investor Warren Buffett, it probably is. But then there are corporate executives like Ken Lay and Jeff Skilling, who took over a successful pipeline company they renamed Enron and ruined it because they encouraged unethical business practices that masked whether their company was really making any money.39 Similarly, Bernie Ebbers, the CEO of WorldCom, accumulated huge debts by growing his company through acquisitions until there was nothing else to acquire, and then his accountants started fudging results so the stock price would stay high.40

Indeed, in both of these cases and in many others, such as the collapse of Value America, the CEOs focused on keeping the stock price high instead of paying attention to whether their companies were actually making money.41 All were at one time or another praised as business geniuses. The social intelligence possessed by some CEOs may be the ability to create their own Matthew effects through relentless self-promotion.

Convergent technologies to enhance human performance

Nanotechnology in combination with advances in medicine, information technology, and robotics will be transformative.42 Machine learning algorithms are now trading on Wall Street at nanosecond speed, far beyond the capacity of the human nervous system, which suggests that human traders will soon be obsolete.43 Consider the possibility of reproducing organs on 3-D printers, or neural-device interfaces that allow the brain to control devices, or developing cochlear implants that enhance hearing, or extending the human lifespan through a combination of technologies, including genetic modification of human embryos.44