This is a weekly post we publish on Fridays with links to articles that touch on economic inequality and growth. The first section is a round-up of what Equitable Growth published this week, and the second is work we’re highlighting from elsewhere. We might not be the first to share these articles, but we hope by taking a look back at the whole week, we can put them in context.

Equitable Growth round-up

This week, Equitable Growth released three new working papers:

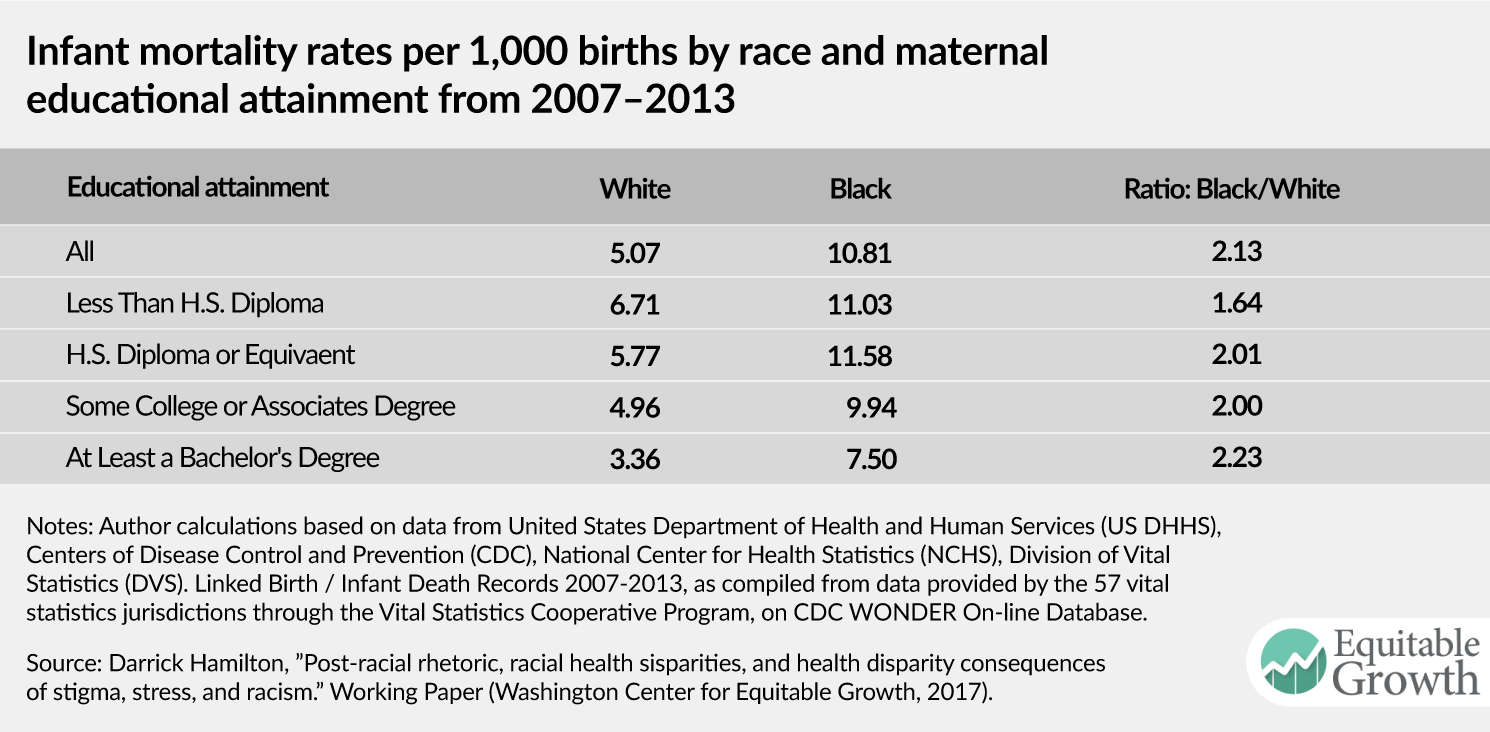

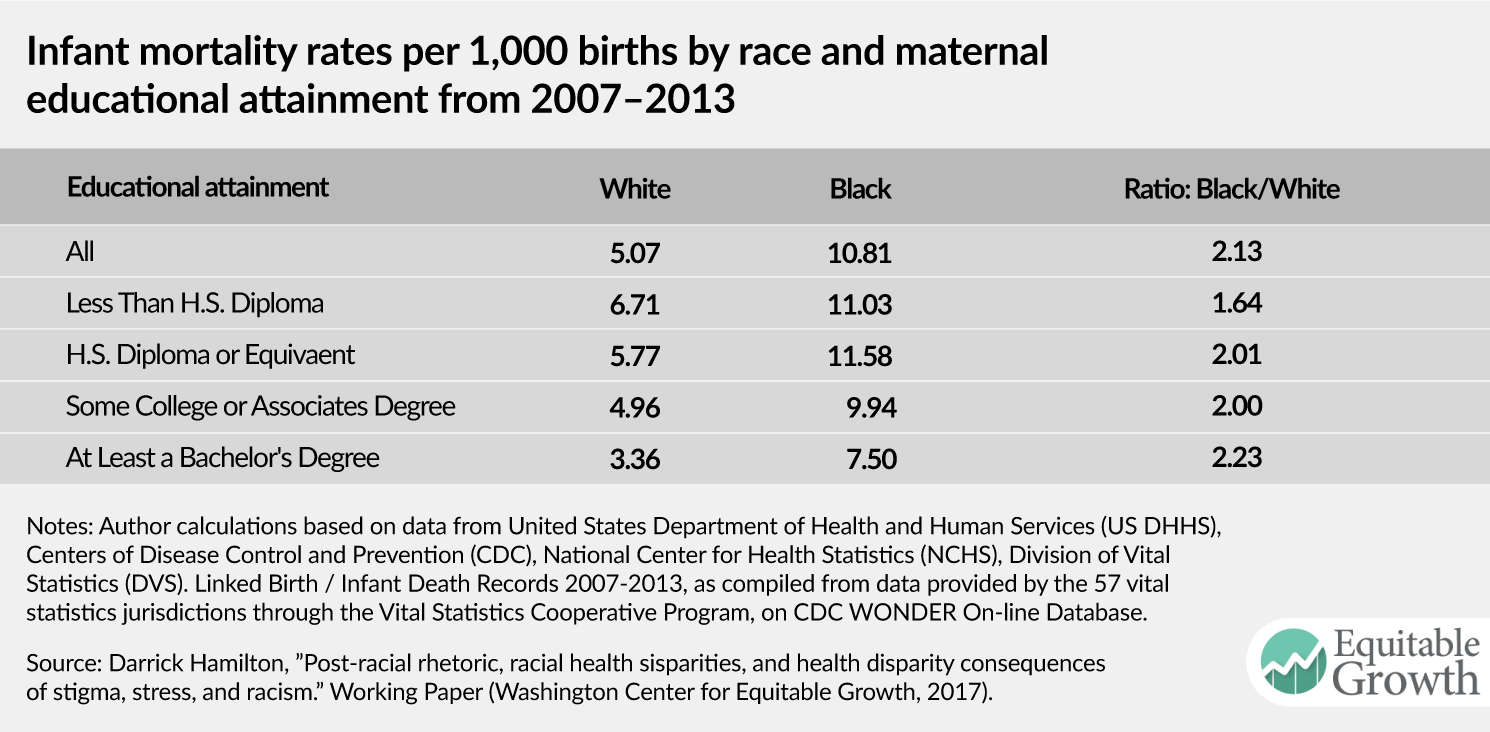

The first is by Darrick Hamilton, an associate professor of economics and urban policy at the New School for Social Research, who analyzes the role of racism and stigma in persistent racial health disparities. Hamilton also wrote a column on his research. He explains that racial health disparities persist for black Americans regardless of socioeconomic status and, paradoxically, often worsen with more education.

Jess Benhabib and Alberto Bisin, both professors of economics at New York University, wrote the other two papers, one of them with NYU Ph.D. candidate Mi Luo. Both papers examine the distribution of wealth in the United States and why it is so unequal. In a separate column, Nick Bunker digs into the papers’ main findings.

Bunker also has a new issue brief, which asks “Just how tight is the U.S. labor market?” As wage growth continues to be tepid, Bunker’s analysis shows that the labor market is not as tight as the low unemployment rate would have you believe.

Every month the U.S. Bureau of Labor Statistics releases data on hiring, firing, and other labor market flows from the Job Openings and Labor Turnover Survey, better known as JOLTS. We highlight a few key findings from this month’s report in a set of graphs.

In an op-ed for the Washington Business Journal, Heather Boushey argues that repealing and replacing D.C.’s paid family leave act would harm not only workers but also businesses, D.C.’s government, and the city’s economy.

Links from around the web

In an interview published by ProMarket, Anat Admati, the George G.C. Parker Professor of Finance and Economics at Stanford University’s Graduate School of Business, argues that economists’ and governments’ failure to address the growing concentration of corporate power and rising inequality has had severe consequences for economic and political stability. [promarket]

Perry Stein writes about a new Georgetown University study finding that Washington, D.C.’s growing economy is leaving the city’s longtime black residents behind, mirroring a trend in cities across the nation. The inequities, Stein writes, can be traced back to discriminatory practices that prevented black residents from participating in the economy. [washington post]

Tanvi Misra interviews MacArthur grant recipient and New York Times journalist Nikole Hannah-Jones about how the legacy of historic discriminatory practices is not the only factor in persistent racial segregation. Hannah-Jones maintains that “there are policymakers who are making decisions right now that are maintaining segregation.” [citylab]

In today’s economy, only the most educated Americans can afford to pick up and move for better opportunities, a serious problem in a dynamic economy. Alana Semuels writes about how, even as jobs decline in some regions of the United States, skyrocketing housing costs in the best-paying cities prevent lower-income workers from relocating. [the atlantic]

Ana Swanson and Jim Tankersley examine a new International Monetary Fund report warning governments that they risk undermining global economic growth by cutting taxes on the wealthy. [new york times]

Friday Figure

From “Post-racial rhetoric, racial health disparities, and health disparity consequences of stigma, stress, and racism,” by Darrick Hamilton.