The causes and consequences for sustained economic development

Overview

Introduction

In 2013, the U.S. Chamber of Commerce commissioned a study aimed at undermining the U.S. Environmental Protection Agency’s claims that its own and other federal agencies’ regulatory efforts created thousands of jobs. Naturally, the study challenged not only the agency’s findings but also its methods, particularly EPA’s economic models, arguing that there was little evidence that the agency had even tried to analyze the effects of its regulations on employment.1 This disagreement is hardly novel. Anti-regulatory efforts often highlight the job-stifling effects of regulation, while pro-regulatory positions often point to job growth and overall economic growth as benefits of regulation. The debates often hinge on profoundly different economic models of employment, and employment patterns are notoriously complicated to model in the first place.


Rather than add to this noise about economic modeling, in this paper I will discuss the role of regulation in technological development, looking specifically at the automobile industry. New technologies are clearly related to economic growth, but modeling that relationship is complicated and actually requires consideration of “counterfactuals,” or assessing whether certain technologies would have emerged without regulation. Considering the question of how new technologies developed in response to regulation and then how those technologies affect job growth is even more complicated and beyond the scope of this paper. That’s why this paper focuses on the question of how regulation, technological development, and economic growth are linked by examining a multi-part, historical case study of the automobile industry and environmental regulations.


This paper considers the role of initial efforts in California to address the problem of smog and the ways in which that relatively small-scale regulation subsequently modeled and then fed a national-scale regulatory effort, leading to the creation of the U.S. Environmental Protection Agency and to the Clean Air Act and its amendments around 1970. The story does not end with the Clean Air Act and the EPA, however; it became more interesting as emissions-control technologies took on a life of their own in the ensuing decades. Engineers started with a variety of ways to meet the new environmental regulations of the 1970s, but by the 1980s, controlling the emissions of the passenger car had become an interesting engineering problem unto itself, one that led to an increasingly important role for electronics in the automobile. This paper explores that period as well.

The paper finds that regulation played an important catalytic role, forcing automobile companies to start addressing emissions and air pollution, a move unlikely without regulation, but also that the ensuing thread of technological innovation gained a life of its own as engineers figured out how to optimize combustion in the automobile engine in the 1980s and ’90s, specifically using computer technology. In short, what began as a begrudging effort to reduce harmful emissions evolved into a successful reshaping of the automobile into a computer with an engine and wheels.

Technology-forcing regulation, emerging technologies, and the entrepreneurial state

In this section, I will lay out three ideas that will guide the forthcoming case study. The first is that regulation aimed at reducing air pollution in the 1970s was designed to force the auto industry to develop new devices to control vehicle emissions and exhaust—it was not a specification of what these devices might be or how they should operate. This approach of forcing firms to develop new technologies that will meet certain desired outcomes is a common feature of concerns about emerging technologies in the 21st century.

Second, an examination of the analyses aimed at unpacking the challenges faced by engineers who are obliged to develop emerging technologies is useful when considering the aims and effectiveness of technology-forcing regulation, as I do in this paper. And lastly, I will bring into the analysis the “entrepreneurial state” analytical framework of Mariana Mazzucato, a noted professor of the economics of innovation at the Science Policy Research Unit of the University of Sussex, as a way to consider the role of the state in encouraging technological development. Mazzucato writes about the various roles of the entrepreneurial state in building institutions to create long-term economic growth strategies, “de-risk” the private sector, and “welcome” or cushion short-term market failures.2 Rather than a laissez-faire state, an active, entrepreneurial state eases the route to economically important innovations produced by the private sector.

Technology-forcing regulation

Economists and others refer to legislation with a goal of forcing industry to develop new solutions to technological problems as “technology-forcing” regulation.3 The Clean Air Act of 1970, which targeted both stationary and mobile/non-point air pollution sources, is probably the most famous piece of technology-forcing legislation in U.S. history. The act specified that various industries meet standards for emissions for which no technologies existed when the act was passed. The Clean Air Act set standards that firms had to meet; importantly, the technology to be used to meet the standards was not specified, allowing firms to either develop or license a new device to add to the technological system in order to meet the stated criteria. Under this sort of technology-forcing regulation, both the electrical power utilities and the automobile industry developed a wide array of new exhaust-capturing and emission-modifying devices.

The legislative strategy of writing regulations that presented standards for which the current in-use technologies were insufficient was upheld by the U.S. Supreme Court in 1976 in the case of Union Electric Company v. Environmental Protection Agency. In this case, Union Electric challenged the Clean Air Act’s provision that a state could shut down a stationary source of pollution in order to force it to comply with a standard for which a feasible, commercial remediation technology did not yet exist.4 The U.S. Supreme Court upheld the legislation; states could set technologically infeasible emission limits where necessary to achieve National Ambient Air Quality Standards, which set limits on certain pollutants in ambient, outdoor air.5 Not upholding the statute would have opened the door to firms moving to locations with better air quality in order to emit more pollution and still keep the measured pollutants under the limits. This was where performance-based standards met technology-forcing regulations.

Emerging technologies

When the Clean Air Act was amended in 1977, Part C of the statute elaborated the rule that new sources in locations that already met National Ambient Air Quality Standards must use the “best available” control technology. Part D stipulated that new plants in locations that did not meet these standards had to comply with the lowest achievable emission rate. There would be no havens for polluters under the Clean Air Act. These regulations spurred the development of commercially viable “scrubbers,” developed around 1980, which remove sulfur dioxide from flue gases with efficiencies in the 90 percent range. The effort to use regulation to force power plants to develop technology to reduce smokestack pollution worked. Its economic effects over the following decade or so require complicated cost-benefit analysis, but the regulation did what it intended: it forced technological development to meet the needs of a cleaner environment.

In-use and emerging technologies

Stepping back from the specifics of clean air regulations for a moment, let’s examine the ways in which already-in-use technologies can be modified by regulations. It may be helpful to compare and contrast in-use, or mature, technologies with new or emerging technologies, although the contrast between the two isn’t as strong as the vocabulary might suggest. Emerging technologies are simultaneously full of promise and danger, while mature technologies seem more predictable: their promises are manifest and their dangers seemingly under control. The term “emerging technology” signals only that a technology has the potential to go either way, toward a utopian or a dystopian future. Emerging technologies are therefore often considered a particularly challenging regulatory problem. The “Collingridge dilemma” in the field of technology assessment, named after the late professor David Collingridge of the University of Aston’s Technology Policy Unit, posits that it is difficult, perhaps impossible, to anticipate the impacts of a new technology until it is widely in use, but that once it is in wide use, it is difficult or perhaps impossible to regulate its pace of change precisely because it is so widely used.6 In short, when change is easy, the need for it cannot be foreseen; when the need for change is apparent, change has become expensive, difficult, and time-consuming. It is now common in economic and political rhetoric to hear the speed of technological development cited as a further obstacle to effective regulation.

Air quality regulation, in particular, was reactive rather than prophylactic in the case of air pollution from both the internal combustion engine and stationary sources such as electrical power plants. But it was also a long process that was highly responsive both to changing understandings of the chemistry of air pollution and to the development of a long list of diverse, new pollution-reduction technologies. In the 1970s, when many of these technologies were developed, demonstrated, and ultimately introduced into the marketplace, the political rhetoric about regulation was less contentious than it is today. Regulation was seen as a legitimate role for the state, and even companies that would be affected by regulations demanded that the rules be settled. Uncertainty about regulation could be seen as a greater threat than the regulations themselves.

Seeing in-use technologies as having common features with emerging technologies opens up a useful space to consider technological change. In what sense is today’s automobile the same technology as the carbureted, drum-brake-equipped, body-on-frame vehicle of the 1960s, let alone the hand-cranked Model T of 1908? There are many continuities, of course, in the evolution of the Model T to the present-day computer-controlled, fuel-injected, safety-system-equipped, unibody-constructed car. But looking at today versus 1908, one might argue that there’s actually little shared technology in the two devices. I would suggest that the category of mature technology isn’t an accurate one if “mature” implies any sense of stasis. As these technologies developed, there was definitely a sense of path dependency—that modifications to the technology were constrained by the in-use device.

This dynamic was especially true in systems that the driver interacted with, such as the braking system. Engineers working on antilock braking systems in the 1960s took it as a rule that they could not change the brakes in any way that would ask drivers to change their habits.8 But the technologies themselves were much more dynamic than the term “mature” implies, and certain sub-systems, among them microprocessors, sensors, and software, were in fact classic enabling emerging technologies.

So what happens if a mature technology like the automobile is treated like an emerging technology for regulatory or governance purposes? Perhaps the Collingridge dilemma should be taken seriously for any regulatory process rather than treated as unique to emerging technologies. The impacts of regulation can be as hard to anticipate for technologies that have already “emerged” as they are for technologies that are new to the market. The case of the automobile demonstrates this dilemma, since no one at the time anticipated the computerization of the car as an outcome of the emissions and safety regulations of the 1970s.

Looking at game-changing regulation of in-use technologies does show, though, that Collingridge overstated the (inherent) difficulty of changing technologies once they’re on the market. Many of the automobile’s changes since the 1970s were driven by the regulation of technologies emerging in the 1960s and 1970s; in hindsight, the technology was changeable in far more ways than the historical actors imagined. This is an optimistic point about technological regulation: technologies are never set in stone.9

One difference between emerging technologies and automobiles is that the economic actors and stakeholders are much clearer in the case of cars. This means that government regulators need to work in concert with known industrial players. Regulation constitutes a collective effort between firms and regulatory agencies. This doesn’t mean the process isn’t antagonistic; in fact, because the interests of the state and private firms don’t align, the process is typically very contentious. But the way forward is not to eliminate or curtail either the state or private industry’s role. The standard-setting process itself is a critical one.10 The role of government, though, should be thought of as more entrepreneurial than bureaucratic in the case of technology-forcing regulation.

The role of government regulation in technological development

Mariana Mazzucato’s concept of the “Entrepreneurial State” offers a framework for thinking about how governments in the 21st century are able to facilitate the knowledge economy by investing in risky and uncertain developments where the social returns are greater than the private returns.11 States typically have three sets of tactics for intervening in technological development:

- Direct investment in research or tax incentives to corporations for research and development

- Procurement standards and consumption

- Technology-forcing regulation

Mazzucato’s book focuses on the first, but the other two played equally important roles in the second half of the 20th century.

Because there are multiple modes of state action, the state plays a central role in fostering and forcing the invention of new devices that offer social as well as, in the long run, private returns. My focus here is on the role of regulation as a stick to force firms to develop in-use technologies in very particular ways. But it is important to recognize how the federal government wrote the legislation that forced technological development. Lee Vinsel, assistant professor of science and technology studies at the Stevens Institute of Technology, calls these technology-forcing regulations “performance standards” because they specified both a criterion and a testing protocol, leaving firms wide latitude to design any device that met the criterion in standardized tests.12 Armed with the ideas that technologies are never static or fully mature and that the state plays critical and diverse roles in fostering and forcing new technologies to market, let’s now turn our attention to the phenomenon of emissions regulations for automobiles since the late 1960s.

Setting standards for automobile emissions

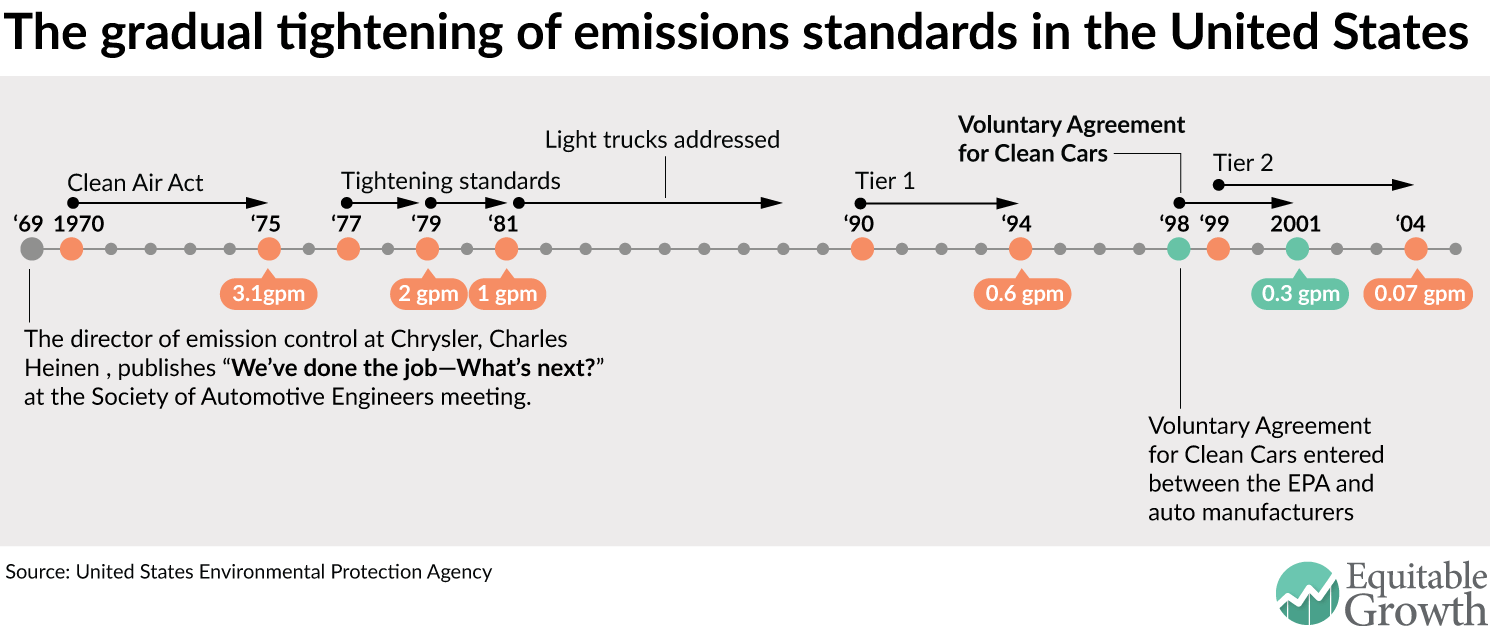

While I could provide a long timescale picture of the car’s development, my focus here is on the gradual computerization of the automobile in response to regulatory efforts to make it both safer and less polluting. Computer control of various functions of the automobile began first with electronic fuel injection and then antilock brakes in the late 1960s.13 The most important developments in electronic control occurred with the development of emissions-control technologies in the 1970s and 1980s, so that by about 1990 the automobile was being fundamentally re-engineered to be a functional computer on wheels. (See Figure 1.)

Figure 1

The implementation of these new systems was fairly gradual and followed a typical pattern of initial installation on expensive models that then trickled down to cheaper ones. Standardized parts and economies of scale are critical manufacturing techniques in the automobile industry, so there was and is often an incentive to use the same devices across different models. Therefore, when California led the creation of auto emissions regulations, the state was a large enough market that automobile manufacturers could almost treat its standards as national ones. The auto industry stood to gain nothing from emissions standards that differed state by state. Large markets such as California gave the industry an incentive to want national standards instead of state-by-state regulation, even if some states might have set less stringent, more easily achieved goals.14

The types and forms of clean air regulations were important to making them effectively force technological development. Regulations had to be predictable, because dynamic standards would create higher levels of uncertainty, which makes firms nervous and unhappy. Technical personnel, however, often advocated standards that could increase as new technologies were developed and implemented; engineers, in particular, often exhibited an epistemic preference for the “best” (the highest achievable) standards. This stance was particularly common for air pollution, as there was no clear public health threshold for how much nitrogen oxide or sulfur dioxide was dangerous or harmful. Many engineers thought the technologies should constantly improve and remove ever more chemicals known to be harmful to human lungs and ecosystems.

This debate about whether standards should either be static or instead increase with the development of ever more complicated technologies wasn’t just occurring inside government. In 1969, the director of emission control at Chrysler, Charles Heinen, presented a rather controversial paper titled “We’ve done the job—What’s Next?” at the Society of Automotive Engineers meeting that January. In the paper, Heinen lauded auto manufacturers for making exactly the needed improvements to clean up emissions and then pronounced the job done. Clearly speaking for the automotive industry, Heinen also questioned the health effects of automobile emissions, writing, “automobile exhaust is not the health problem it has been made out to be.”15 He argued that since life expectancies for Los Angeles residents were equal to or greater than national averages, the concentration of exhaust and the prevalence of smog could not be conclusively called dangerous to health. Furthermore, he argued that any further reductions in emissions would come only at a high cost—pegging the national cost to develop such technologies at $10 billion, and claiming that further reductions would likely come at the cost of decreased fuel economy for the user.

Heinen was positioning the industry against what he called catalytic afterburners, devices to further treat the carbon monoxide, nitrogen oxides, and hydrocarbons in the exhaust. Yet some government regulators were specifically trying to force the development of catalytic afterburners or converters. The result was a victory for the regulators: by 1981, the three-way catalytic converter would become the favored technology to meet precisely the kind of escalating standards Heinen was worried about.

But it was a victory for quite a number of engineers, too. Phil Myers, a professor of mechanical engineering at the University of Wisconsin and president of the Society of Automotive Engineers in 1970-71, presented a paper in 1970 titled “Automobile Emissions—A Study in Environmental Benefits versus Technological Causes,” which argued that “we, as engineers concerned with technical and technological feasibilities and relative costs, have a special interest and role to play in the problem of air pollution.”16 He wrote that while analysis of the effects of various compounds wasn’t conclusive in either atmospheric chemistry or public health studies, engineers had an obligation to make evaluations and judgments.

To Professor Myers, this was the crucial professional duty of the engineer—to collect data where possible but to realize that data would never override the engineer’s responsibility to make judgments. He strengthened this position in a paper he presented the following year on “Technological Morality and the Automotive Engineer,” in a session he organized on Engineers and the Environment about bringing environmental ethics to engineers. Myers argued that engineers had a moral duty to design systems with the greatest benefits to society and not to be satisfied with systems that merely met requirements. At the end of his first paper in 1970, Myers also made his case for the role of engineers in both technological development and regulation, writing:

At this point, it would be simple for us as engineers to shrug our shoulders, argue that science is morally neutral and return to our computers. However, as stated by [the noted physician, technologist, and ethicist O. M.] Solandt, “even if science is morally neutral, it is then technology or the application of science that raises moral, social, and economic issues.” As stated in a different way by one of the top United States automotive engineers, “our industry recognizes that the wishes of the individual consumer are increasingly in conflict with the needs of society as a whole and that the design of our product is increasingly affected by this conflict.”17

Myers then cited atmospheric chemist E.J. Cassell’s proposal to control pollutants “to the greatest degree feasible employing the maximum technological capabilities.”18 For Myers, the beauty of Cassell’s proposal was its non-fixed nature: standards would change (become more stringent) as technologies were developed and their prices reduced through mass production, and when acute pollution episodes threatened human well-being, the notion of feasibility could also be ratcheted up. Uncertainty for Myers constituted a call to action, whereas for Heinen it was a call to inaction. Heinen saw fixed standards as both morally and economically superior, arguing “we’ve done the job.”

Myers also took a position with regard to the role of the engineer to spur consumer action. He wrote:

…our industry recognizes that the wishes of the individual consumer are increasingly in conflict with the needs of society as a whole and that the design of our product is increasingly affected by this conflict. This is clearly the case with pollution—an individual consumer will not voluntarily pay extra for a car with emissions control even though the needs of society as a whole may be for increasingly stringent emissions control. …there is universal agreement that at some time in the future the growth of the automobile population will exceed the effect of present and proposed controls and that if no further action is taken mass rates of addition of pollutants will rise again.19

Myers was arguing that federal emissions standards should be written such that they can become more stringent in the near future but also that regulation was a necessary piece of this system because it would serve to force the consumer’s hand (or to put it another way, to force the market). According to Myers, engineers were the ideal mediators of these processes.

In response to these debates, which occurred among government regulators, industry experts, and technical professionals, the Clean Air Act and its subsequent amendments set multiple standards.20 Fixed, performance-based standards, which changed more often than Myers would have predicted, became the norm, but only after a disagreement about whether to measure exhaust concentration or mass-per-vehicle-mile. The latter was written into the Clean Air Act and remained the standard, but not without attack from those who argued that the exhaust concentration was a better proxy for clean emissions.

The initial standards specified under the Clean Air Act covered carbon monoxide, volatile organic compounds (typically unburned hydrocarbons or gasoline fumes), and nitrogen oxides. Taking nitrogen oxides alone as an example, the standards became significantly tougher over 30 years. The initial standard for passenger cars was 3.1 grams per mile (gpm), which automobile manufacturers had to show they had achieved by 1975.21 With the 1977 amendments, the requirement would drop to 2 gpm by 1979 and to 1 gpm by 1981.

The 1990 Clean Air Act Amendment set a new standard called “Tier 1,” which specified a 40 percent reduction and moved the standard for nitrogen oxides emissions in passenger cars down to 0.6 gpm. Then, in 1998, the Voluntary Agreement for Cleaner Cars between the EPA, the automobile manufacturers, and several northeastern states again reduced the nitrogen oxides goal by another 50 percent to 0.3 gpm (just 10 percent of the original standard from 1975) by 2001. This agreement was unusual in that it was not mandated by the Clean Air Act but would nonetheless affect cars nationally.

At the same time as the Voluntary Agreement for Cleaner Cars was unveiled, the EPA issued the Tier 2 nitrogen oxides standards, which reduced the standard to 0.07 gpm by 2004. Tier 2 also specified a change in the formulation of gasoline so that these and other standards were more readily achievable. So in the 30 years between 1975 and 2005, nitrogen oxides emission standards were reduced by approximately 98 percent. What did auto manufacturers have to do to meet these seemingly draconian standards?
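The percentages in this and the two preceding paragraphs can be checked with a few lines of arithmetic. The Python sketch below uses only the grams-per-mile figures quoted in the text (the row labels are my own shorthand) and compares each limit both with the one before it and with the 1975 baseline.

```python
# Passenger-car nitrogen oxides (NOx) limits in grams per mile (gpm),
# as quoted in the text; the labels are descriptive only.
NOX_STANDARDS_GPM = [
    ("1975 initial standard", 3.1),
    ("1977 amendments, by 1979", 2.0),
    ("1977 amendments, by 1981", 1.0),
    ("1990 amendments, Tier 1", 0.6),
    ("1998 voluntary agreement, by 2001", 0.3),
    ("Tier 2, by 2004", 0.07),
]


def percent_reduction(old: float, new: float) -> float:
    """Percentage by which a limit fell when it moved from `old` to `new`."""
    return (old - new) / old * 100.0


def reduction_table(standards):
    """Return (label, gpm, step reduction %, cumulative reduction % vs. first entry)."""
    baseline = standards[0][1]
    previous = baseline
    rows = []
    for label, gpm in standards:
        rows.append((label, gpm,
                     percent_reduction(previous, gpm),
                     percent_reduction(baseline, gpm)))
        previous = gpm
    return rows


if __name__ == "__main__":
    for label, gpm, step, total in reduction_table(NOX_STANDARDS_GPM):
        print(f"{label:36s} {gpm:5.2f} gpm  step -{step:5.1f}%  since 1975 -{total:5.1f}%")
```

Run as a script, it prints one row per standard: the Tier 1 step works out to 40 percent, the 1998 step to 50 percent, and the Tier 2 limit to a reduction of about 97.7 percent from 1975, consistent with the “approximately 98 percent” figure.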

Meeting emissions standards: The catalytic converter

The general strategy of automobile engineers for meeting California standards in the 1960s and federal standards in the first half of the 1970s was to add devices to the car to catch, and often reburn or chemically transform, unwanted exhaust and emission gases.22 The first several add-ons were to reduce unburned hydrocarbons (gasoline fumes), which were targeted in 1965, before the Clean Air Act, by the Motor Vehicle Air Pollution Control Act. Once the focus shifted to nitrogen oxides in the 1970s, the challenge for engineers was to invent technologies that didn’t undo a decade’s worth of hydrocarbon reduction. Nitrogen oxides are formed through an endothermic process in an engine and are produced only at high combustion temperatures, greater than 1,600 degrees centigrade. Therefore, one key to reducing them was reducing the temperature of combustion, but in the 1960s, combustion temperatures had often been increased to more completely burn hydrocarbons. Reducing combustion temperatures threatened to increase unburned hydrocarbons.

The solution was to invent a device that would engage only once the engine was hot and would at that point reduce combustion temperature. The first of these devices was the exhaust gas recirculation system, which routes a portion of the exhaust back into the engine’s intake to lower combustion temperature and which required a temperature sensor in the car’s engine to tell it when to engage. This wasn’t the first automotive technology reliant on sensors, but sensors of all kinds would become increasingly important in the technologies developed to meet the ever-tightening nitrogen oxides standards. Sensors were also not as reliable as either automobile manufacturers or car owners wanted, and the exhaust gas recirculation system was the first of many technologies best known to drivers for lighting up the dashboard “check engine” indicator. The new technology was useful, but it did not change the chemistry of combustion enough to meet the falling nitrogen oxides allowances. A more complex add-on device was not far off.
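The engagement logic described here, in which the recirculation system switches on only once a temperature sensor reports a hot engine, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any manufacturer’s control code: the 70 °C threshold, the plausibility range, and the names `egr_control` and `EgrDecision` are all hypothetical. The plausibility check hints at why unreliable sensors so often reached drivers as a lit “check engine” lamp.

```python
from dataclasses import dataclass

# Hypothetical calibration values, chosen only for illustration.
EGR_ENABLE_TEMP_C = 70.0             # engine counts as "hot" at or above this
PLAUSIBLE_RANGE_C = (-40.0, 150.0)   # readings outside this suggest a failed sensor


@dataclass
class EgrDecision:
    egr_on: bool          # open the recirculation valve?
    check_engine: bool    # light the dashboard warning lamp?


def egr_control(coolant_temp_c: float) -> EgrDecision:
    """Engage recirculation only on a hot engine; flag implausible sensor readings."""
    low, high = PLAUSIBLE_RANGE_C
    if not low <= coolant_temp_c <= high:
        # Treat an out-of-range reading as a sensor fault: keep the valve
        # closed and warn the driver rather than act on bad data.
        return EgrDecision(egr_on=False, check_engine=True)
    return EgrDecision(egr_on=coolant_temp_c >= EGR_ENABLE_TEMP_C, check_engine=False)


if __name__ == "__main__":
    for reading in (20.0, 90.0, 400.0):
        print(reading, egr_control(reading))
```

For example, `egr_control(90.0)` returns a decision with `egr_on=True`, while a reading of 400 °C is treated as a sensor fault: recirculation stays off and the lamp lights.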

Members of the Society of Automotive Engineers first started to hear about devices to transform vehicle exhaust gases using catalysts in 1973. European and Japanese engineers were prominent in these sessions, as they were convinced that their governments would require catalytic emissions technologies (in contrast to the performance-standards approach already in place in the United States). It wasn’t that U.S.-based engineers opposed catalytic devices, but they knew that the EPA was concerned with testable emissions standards rather than with the use of any particular device.

The catalytic converters of the 1970s could transform several different exhaust gases. Engineers were particularly interested in the transformation of carbon monoxide into carbon dioxide, changing unburned hydrocarbons into carbon dioxide and water, and dealing with nitrogen oxides by breaking them apart into nitrogen and oxygen. The converters presented great promise for these reactions, and converters that performed all three reactions were called three-way catalytic converters.
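Written as balanced equations, the three transformations described in this paragraph look roughly as follows, with CxHy standing for a generic unburned hydrocarbon. The third line follows the paragraph’s simplified description of breaking nitrogen oxides apart; in an actual three-way converter, much of the nitric oxide is instead reduced by reacting with carbon monoxide and other reducing gases (for example, 2NO + 2CO → N2 + 2CO2).

```latex
\begin{aligned}
2\,\mathrm{CO} + \mathrm{O_2} &\rightarrow 2\,\mathrm{CO_2} \\
\mathrm{C}_x\mathrm{H}_y + \left(x + \tfrac{y}{4}\right)\mathrm{O_2} &\rightarrow x\,\mathrm{CO_2} + \tfrac{y}{2}\,\mathrm{H_2O} \\
2\,\mathrm{NO} &\rightarrow \mathrm{N_2} + \mathrm{O_2}
\end{aligned}
```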

But catalytic converters also presented challenges to auto manufacturers. The catalysts in them were easily fouled; that is, other materials bonded with the catalysts and prevented them from catalyzing the reactions they were supposed to generate. The most common fouling substance was lead, which in the 1970s was added to gasoline to prevent engines from knocking. Lead had to be removed from gasoline formulations if catalytic converters were to be used. This had to be negotiated between the federal government, the oil industry, and the automobile industry, none of which were satisfied with the solution.

Second, the catalytic converters depended on a consistent exhaust gas as an input. To use the same converter on different cars, it had to receive the same chemical compounds, and therefore each model of vehicle had to have the same pre-converter emissions technologies.

Lastly, the catalytic converter depended on a consistent combustion temperature, which required more temperature and oxygen sensors in the engine. Along with electronic fuel injection, which was increasingly replacing carburetion as a technique for vaporizing the car’s fuel (mixing fuel and air) as it entered the intake manifold or cylinder, exhaust gas recirculation systems and catalytic converters were the chief engine technologies driving the move to computer control in the car. All three of these technologies depended on data input from sensors, logic control, and relays that engage the system under the right conditions.
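The sensor, logic, and relay pattern this paragraph describes can be illustrated with a small rule table in Python. It is a hypothetical sketch, not a reconstruction of any real engine controller: the sensor names, relay names, and thresholds are invented. Each control cycle simply reads the current sensor values, evaluates one condition per subsystem, and returns which relays to engage.

```python
from typing import Callable, Dict

Readings = Dict[str, float]        # sensor name -> current value
Rule = Callable[[Readings], bool]  # condition under which a relay engages

# Hypothetical rule table; sensor names, relay names, and thresholds are invented.
RULES: Dict[str, Rule] = {
    # Keep fuel injection running whenever the engine is turning.
    "fuel_injection": lambda r: r["engine_rpm"] > 0,
    # Open the recirculation valve only on a running, warmed-up engine.
    "egr_valve": lambda r: r["engine_rpm"] > 0 and r["coolant_temp_c"] >= 70.0,
}


def control_cycle(readings: Readings, rules: Dict[str, Rule] = RULES) -> Dict[str, bool]:
    """One pass of the loop: sensor data in, each rule evaluated, relay states out."""
    return {relay: bool(rule(readings)) for relay, rule in rules.items()}


if __name__ == "__main__":
    print(control_cycle({"engine_rpm": 800.0, "coolant_temp_c": 20.0}))   # cold idle
    print(control_cycle({"engine_rpm": 2500.0, "coolant_temp_c": 90.0}))  # warm cruise
```

Keeping the conditions as data means that adding another subsystem is a matter of adding a row to `RULES` rather than rewriting the loop.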

Reconceptualizing combustion

Once the catalytic converter was introduced and increasingly made standard in the late 1970s and 1980s, further reductions in nitrogen oxides emissions depended either on continuous improvements to the catalytic converter or on the addition of new technologies. But many engineers were dissatisfied with the Rube Goldberg-like contraption that the car’s emissions control was becoming, especially those working on more expensive models, who had more opportunity to design new systems rather than resort to the “off the shelf” emission-reduction technologies that were becoming more and more common. There had to be a better design that would make the targets achievable. The promise lay in managing the whole combustion process: not switching on an exhaust gas recirculation system once in a while, but continuously optimizing combustion with respect to undesirable emission compounds. Developing the technologies to manage combustion electronically took another decade.

Combustion management started to appear with the gasoline direct injection approach, which began development in earnest in the 1970s and started to appear on the market in the 1990s.23 Gasoline direct injection required the car to have a central computer or processing unit to read input data from a series of sensors and produce electric signals to make combustion as clean as possible, especially focused on the production of nitrogen oxides. Gasoline direct injection changed the ratio of fuel to air continuously. A warm engine under no load could burn a very lean mixture of fuel, while a cold engine or an engine under heavy load needed a different mixture to keep nitrogen oxides at the lowest possible level.
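The idea of continuously varying the mixture with engine temperature and load can be sketched as a simple Python function. The stoichiometric air-to-fuel ratio of roughly 14.7:1 for gasoline is a standard figure; every other number here (the warm-engine threshold, the load breakpoints, the lean and cold-engine ratios) and the name `target_air_fuel_ratio` are hypothetical placeholders, and a real controller would rely on far more detailed calibration maps.

```python
# Approximate stoichiometric air-to-fuel mass ratio for gasoline.
STOICHIOMETRIC_AFR = 14.7

# Hypothetical calibration values, for illustration only.
WARM_TEMP_C = 70.0   # below this the engine counts as cold
LIGHT_LOAD = 0.2     # fraction of maximum load treated as "no load"
HEAVY_LOAD = 0.6     # at or above this, run at stoichiometric
LEAN_AFR = 25.0      # very lean mixture for a warm, lightly loaded engine
COLD_AFR = 12.5      # richer mixture for a cold engine


def target_air_fuel_ratio(coolant_temp_c: float, load_fraction: float) -> float:
    """Target air-to-fuel ratio for the current engine temperature and load (0 to 1)."""
    if not 0.0 <= load_fraction <= 1.0:
        raise ValueError("load_fraction must be between 0 and 1")
    if coolant_temp_c < WARM_TEMP_C:
        return COLD_AFR
    if load_fraction <= LIGHT_LOAD:
        return LEAN_AFR
    if load_fraction >= HEAVY_LOAD:
        return STOICHIOMETRIC_AFR
    # Between light and heavy load, blend continuously from lean toward stoichiometric.
    t = (load_fraction - LIGHT_LOAD) / (HEAVY_LOAD - LIGHT_LOAD)
    return LEAN_AFR + t * (STOICHIOMETRIC_AFR - LEAN_AFR)
```

With these placeholder values, a warm engine at 10 percent load gets the lean 25:1 mixture, a warm engine at 80 percent load gets 14.7:1, and at 40 percent load the function blends halfway between them, to about 19.85:1.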

Yet early versions of this technology, such as Ford’s PROCO system on the late 1970s Crown Victoria, failed to meet increasingly stringent standards, and the system was canceled. It took well over a decade for gasoline direct injection to mature into a system that could help achieve the Tier 1 and Tier 2 nitrogen oxides standards.

Other technologies reinforced the approach of managing combustion to optimize output under widely varying conditions. Variable valve timing and lift systems, often referred to generically as VTEC systems even though VTEC is the trademarked name of Honda’s system, control the timing and extent of an engine’s valve openings. These mechanically and electronically complex systems can improve performance and reduce emissions, and they require data analysis by a computer processing unit. They were introduced in the late 1990s.

By the 1990s, clean combustion was the goal of automotive engine designers. Ideally, the car would emit nothing beyond carbon dioxide, water, and what is essentially air, composed primarily of nitrogen and oxygen. Carbon dioxide output is the real issue today, as it is now the primary pollutant produced by the automobile. It is not that earlier engineers regarded it as inert, but rather that combustion inevitably generates carbon dioxide in quantities far too large either to capture or to catalyze. There is no catalytic path to eliminating carbon dioxide after the combustion cycle, and capturing it presents insurmountable problems because of its volume and the obvious question of storage. As early as 1970, Phil Myers, perhaps surprisingly, raised the question of carbon dioxide, noting the “increasing concern about [CO2’s] potential for modifying the energy balance of the earth, that is the greenhouse effect.” Myers also called CO2 the automobile’s “most widely distributed and abundant pollutant.”24 The internal combustion engine is more than a carbon-dioxide-producing machine, but it is nothing less than that either.

The recent scandal over Volkswagen’s computerized defeat device bears directly on this concern over computer control of combustion and its output of nitrogen oxides. The VW engines currently under scrutiny, however, are diesel rather than gasoline engines, so they are subject to different Clean Air Act regulations and involve some systems and technologies other than those described in this paper. Still, it is not surprising that nitrogen oxides are the pollutants that the VW engines produce in excess of regulatory levels.

The technologies developed since the 1970s to achieve such a striking reduction in nitrogen oxides all involved trade-offs with vehicles’ performance and gas mileage. Gas mileage is also subject to federal regulation, but the corporate average fuel economy standards, or CAFE standards, specify only an average across a manufacturer’s fleet of vehicles in a given class. Volkswagen’s choice to implement software that overrode the nitrogen-oxides-controlling systems except when a vehicle was under the precise conditions of testing was a choice to privilege what the company perceived as customers’ preferences over meeting U.S. requirements for pollution emissions. There is much to say about VW, but little of it involves the subject of this paper: the role of regulation in generating socially desirable innovations.

Conclusion: Regulation, new technologies, and economic growth

The purpose of this paper was to analyze the historical interactions between regulation and technological development. In the case of automotive innovations, it is clear that stringent emissions standards did force the development of new technologies by jumpstarting a quest to improve the car and make it less environmentally taxing and less harmful to human health. More importantly, the continually escalating emissions standards, exemplified here by increasingly stringent nitrogen oxides standards, led to fundamental changes in the car that made it not only less polluting but also, as a largely unanticipated byproduct of computerization, more reliable.

Cars have also become much safer through regulation, with motor vehicle crash deaths dropping from approximately 20.6 per 100,000 people in 1975 to about 10.3 per 100,000 in 2013, a drop of 50 percent.25 Few of the technologies used to improve the car existed prior to the enactment of legislation.26 Technology-forcing regulations can be effective, and the opposition of the industries affected by them is usually temporary, lasting only until new technologies are available. Given the complexity of many of today’s technological challenges, especially those in the energy sector and involving climate change, the U.S. government needs to consider its role as a driver of technological change.

The link between regulation and technological innovation in automobiles has had a variety of complicated effects on economic growth and the labor market—from creating new jobs to produce new automotive electronic technologies to changing the economic environment of the repair garage. This process of continuous innovation in response to regulation has continued since the 1990s, and now we face the challenges and opportunities of self-driving cars or autonomous vehicles. The adoption of these new technologies for a new kind of car over the next decades will require major changes in legislation, in driver behavior and attitudes, and of course in technologies themselves, including large technological systems. All of these will have important economic effects, some of which are fairly predictable, others of which are not, but all of which will certainly be politicized by interested parties.

About the author

Ann Johnson is an associate professor of science and technology studies at Cornell University. Her work explores how engineers design new technologies, particularly automobiles and nanotechnology, and how technology serves as a tool of nation-state building.

Acknowledgements

Special thanks to W. Patrick McCray, David C. Brock, Lee Jared Vinsel, James Rodger Fleming, Adelheid Voskuhl, and Cyrus Mody for conversations and comments, and the other members of the History of Technology workshop at the Washington Center for Equitable Growth, especially Jonathan Moreno and Ed Paisley.

End Notes

1. Benjamin Goad, “Chamber study claims to debunk EPA figures on job-creating regulations,” The Hill, February 27, 2013, available at http://thehill.com/regulation/energy-environment/285359-chamber-study-claims-to-debunk-epas-job-creation-figures.

2. Mariana Mazzucato, The Entrepreneurial State: Debunking Public vs. Private Sector Myths (London: Anthem Press, 2013), 6, 196.

3. “Forcing Technology: The Clean Air Act Experience,” The Yale Law Journal, Vol. 88, No. 8 (July 1979):1713-1734; David Gerard and Lester Lave, “Implementing Technology-Forcing Policies: The 1970 Clean Air Act Amendments and the Introduction of Advanced Automotive Emissions Controls,” Technological Forecasting and Social Change, Vol. 72, No. 7 (Sept. 2005):761-778; Gerard and Lave, “Experiments in Technology Forcing,” International Journal of Technology Policy and Management, Vol. 7, No. 1 (2007):1-14.

4. “Forcing Technology: The Clean Air Act Experience.”

5. See “National Ambient Air Quality Standards,” available at http://www3.epa.gov/ttn/naaqs/criteria.html.

6. David Collingridge, The Social Control of Technology (New York: St Martin’s Press, 1980).

7. The Royal Society and Royal Academy of Engineering, “Nanoscience and Nanotechnology: Opportunities and Uncertainties,” 69-70, available at https://royalsociety.org/~/media/Royal_Society_Content/policy/publications/2004/9693.pdf; Cass R. Sunstein, “The Paralyzing Principle,” Regulation (Winter 2002-2003):32-37, available at https://www.heartland.org/sites/default/files/v25n4-9.pdf.

8. Ironically, ABS turned out to do exactly this, asking drivers to stop pumping the brakes in an emergency stop and apply them firmly instead. See Ann Johnson, Hitting the Brakes: Engineering Design and the Production of Knowledge (Durham, NC: Duke University Press, 2009).

9. I am, however, not claiming that all changes have had positive outcomes or that outcomes haven’t been dominated by unintended consequences at times.

10. Lee Vinsel does a very good job working through this process in “Designing to the Test: Performance Standards and Technological Change in the U.S. Automobile after 1966,” Technology and Culture, Vol. 56, No. 4 (2015):868-894.

11. Mazzucato, The Entrepreneurial State, 3-4.

12. Vinsel, “Designing to the Test,” 871.

13. Johnson, Hitting the Brakes.

14. The variation in emissions testing regimes of in-service automobiles is an example of the wide latitude for emissions regulation at the state level.

15. Charles Heinen, “We’ve done the job—What’s Next?”, SAE Paper 690539 (1969).

16. P.S. Myers, “Automobile Emissions—A Study in Environmental Benefits versus Technological Costs,” SAE Paper 700182 (1970): 658.

17. Myers, “Automobile Emissions,” 672.

18. E.J. Cassell, “Are we ready for Ambient Air Quality Standards?”, Journal of the Air Pollution Control Association, Vol. 18 (1968):799.

19. Myers, “Automobile Emissions,” 672.

20. EPA Emissions Facts, “The History of Reducing Tailpipe Emissions,” available at http://www3.epa.gov/otaq/consumer/f99017.pdf.

21. Emissions standards take into account what class a vehicle is, and these are technical classes that may or may not cohere with what drivers call a particular vehicle. For simplicity’s sake here, I’ll only write about passenger cars, but all vehicle classes had standards for NOx. Initially, the EPA’s emissions measures were in parts per million; in 1990, the measure changed to grams per mile (gpm)—but here I’ll use gpm for the whole discussion just for clarity’s sake.

22. Richard Chase Dunn and Ann Johnson, “Chasing Molecules: Chemistry and Technology for Automotive Emissions Control.” In James Rodger Fleming and Ann Johnson, eds., Toxic Airs: Body, Place, and Planet in Historical Perspective (University of Pittsburgh Press, 2014).

23. There are many precursors to gasoline direct injection (GDI), but they are not relevant to the systems developed in the ’70s and ’80s for combustion management.

24. Myers, “Automobile Emissions,” 660.

25. Insurance Institute for Highway Safety, “General Statistics,” available at http://www.iihs.org/iihs/topics/t/general-statistics/topicoverview.

26. One of the claims Ralph Nader makes in Unsafe at Any Speed is that automobile companies were withholding technologies that would benefit the public good. The passage of legislation revealed that while this was occasionally the case, more often than not the legislation spurred intensive in-house R&D and licensing agreements between suppliers to meet the new standards for both auto safety and emissions. In several examples, however, Nader is attacking general design principles that rendered cars unsafe rather than indicting car companies for withholding new technologies. Ralph Nader, Unsafe at Any Speed (New York: Grossman Publishers, 1965).
